Can Deep Learning Produce a General Model of Natural Sound Encoding by Auditory Cortex?

Presented By: Stephen V. David, Ph.D.

Speaker Biography: Stephen David is Associate Professor in the Oregon Hearing Research Center at Oregon Health and Science University. Before coming to OHSU, Dr. David completed a postdoctoral fellowship with Shihab Shamma in the Institute for Systems Research at the University of Maryland, College Park. In May 2004, he completed a Ph.D. in Bioengineering at the University of California, Berkeley, studying vision and attention with Jack Gallant. Dr. David’s research interests are in the neural basis of sensory perception, particularly in the auditory system. Current projects use computational approaches to characterize auditory representation by neural populations while manipulating context through associative learning, environmental noise, hearing loss, attention and arousal.

Webinar: Can Deep Learning Produce a General Model of Natural Sound Encoding by Auditory Cortex?

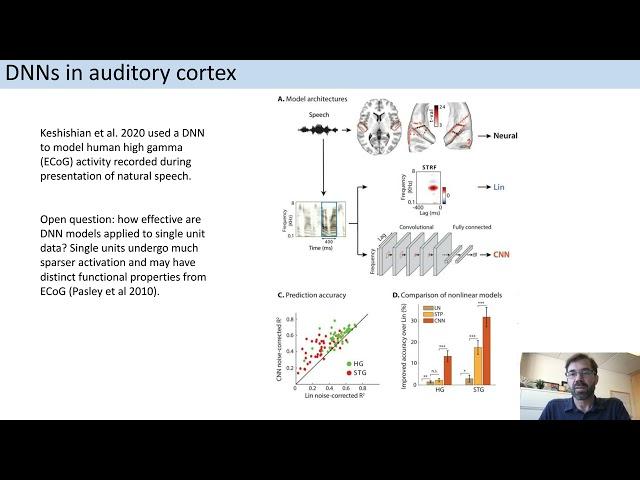

Webinar Abstract: Recent advances in machine learning have shown that deep neural networks (DNNs) can provide powerful and flexible models of neural sensory processing. In the auditory system, standard linear-nonlinear (LN) models are unable to account for high-order cortical representations, but it remains unclear what additional insights DNNs can provide, particularly for single-neuron activity. DNNs can be difficult to fit with relatively small datasets, such as those available from single neurons. In the current study, we developed a population encoding model for a large number of neurons recorded during presentation of a large, fixed set of natural sounds. Leveraging signals from the population substantially improved performance over models fit to individual neurons. We tested a range of DNN architectures on data from primary and non-primary auditory cortex, varying the number and size of convolutional layers at the input and dense layers at the output.
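To make the contrast in the abstract concrete, here is a minimal forward-pass sketch in plain Python. It is not the speaker's model: the function names (`ln_response`, `population_response`), the rectifying nonlinearity, and the toy numbers are all assumptions chosen for illustration. The first function is a single-neuron LN model (a linear spectro-temporal filter followed by a static nonlinearity); the second reuses a small bank of filters shared by every neuron and adds a dense per-neuron readout, which is one simple way to picture a population encoding model.

```python
def ln_response(spectrogram, strf, threshold=0.0):
    """Linear-nonlinear (LN) prediction for one neuron (illustrative only).

    spectrogram: list of frequency channels, each a list of time samples.
    strf: linear filter, one list of lag weights per frequency channel.
    """
    n_time = len(spectrogram[0])
    out = []
    for t in range(n_time):
        drive = 0.0
        for chan, weights in zip(spectrogram, strf):
            for lag, w in enumerate(weights):
                if t - lag >= 0:  # causal filter: only past and present input
                    drive += w * chan[t - lag]
        out.append(max(0.0, drive - threshold))  # static rectification
    return out


def population_response(spectrogram, shared_filters, readout):
    """Shared filter layer followed by a dense per-neuron readout (hypothetical).

    shared_filters: list of filters, each shaped like an STRF, used by all neurons.
    readout: one weight list per neuron, with one weight per shared filter.
    """
    # The hidden layer is computed once and reused for every neuron.
    hidden = [ln_response(spectrogram, f) for f in shared_filters]
    n_time = len(spectrogram[0])
    predictions = []
    for weights in readout:
        predictions.append([
            max(0.0, sum(w * h[t] for w, h in zip(weights, hidden)))
            for t in range(n_time)
        ])
    return predictions


# Toy input: 2 frequency channels x 4 time bins (made-up values).
spec = [[1.0, 0.0, 0.0, 0.0],
        [0.0, 1.0, 0.0, 0.0]]
filters = [[[1.0, 0.5], [0.0, -1.0]],   # filter shared by all neurons
           [[0.0, 0.0], [1.0, 0.0]]]    # second shared filter
readout = [[1.0, 0.0],                  # neuron 0 reads only the first filter
           [0.5, 2.0]]                  # neuron 1 mixes both filters
rates = population_response(spec, filters, readout)
```

In this sketch only the `readout` rows are specific to a neuron, so the shared filters would be constrained by data from every recorded neuron. That is the intuition behind fitting a population model when single-neuron datasets are small; the architectures discussed in the webinar may differ in every detail.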

Earn PACE Credits:

1. Make sure you’re a registered member of LabRoots: https://www.labroots.com/virtual-event/neuroscience-2021/agenda

2. Watch the webinar on YouTube or on the LabRoots website: https://www.labroots.com/webinar/deep-learning-produce-model-natural-sound-encoding-auditory-cortex

3. Claim your PACE credits (expiration date: August 25, 2023): https://www.labroots.com/credit/pace-credits/6480/third-party

LabRoots on Social:

Facebook: https://www.facebook.com/LabRootsInc

Twitter: https://twitter.com/LabRoots

LinkedIn: https://www.linkedin.com/company/labroots

Instagram: https://www.instagram.com/labrootsinc

Pinterest: https://www.pinterest.com/labroots/

Snapchat: labroots_inc
