Model Distillation

Quantization vs Pruning vs Distillation: Optimizing NNs for Inference

Efficient NLP

5K

15,953

1 year ago

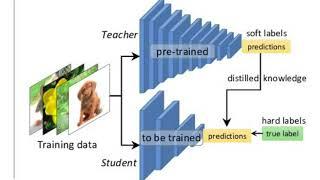

Knowledge Distillation: A Good Teacher is Patient and Consistent

Connor Shorten

6K

20,213

3 years ago

Psychedelicus Mechanismus - AI Generated Psychedelic Animation Video, Art Created by Ai

Aibient

218

728

1 day ago

There's a ton of new AI jobs coming ― It's like 2005 all over again

David Shapiro

8K

26,852

2 days ago

New Horizons in Generative AI: Machine Learning for Translatable Drug Discovery

Stanford HAI

2K

6,478

8 months ago

AI for Drug Design - Lecture 16 - Deep Learning in the Life Sciences (Spring 2021)

Manolis Kellis

17K

56,537

3 years ago

ICML 2023 Panel: Fostering the Development of Impactful AI Models in Drug Discovery

Valence Labs

515

1,717

11 months ago

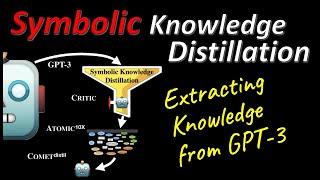

Symbolic Knowledge Distillation: from General Language Models to Commonsense Models (Explained)

Yannic Kilcher

7K

24,357

2 years ago

EfficientML.ai Lecture 9 - Knowledge Distillation (MIT 6.5940, Fall 2023)

MIT HAN Lab

2K

5,283

9 months ago

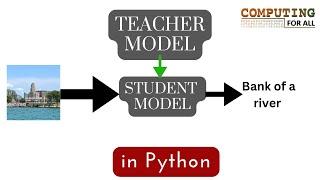

Teacher-Student Neural Networks: Knowledge Distillation in AI

Computing For All

886

2,954

10 months ago

DINO: Emerging Properties in Self-Supervised Vision Transformers (Facebook AI Research Explained)

Yannic Kilcher

35K

118,047

3 years ago

Progressive Distillation for Fast Sampling of Diffusion Models (paper sumary)

DataScienceCastnet

2K

7,025

1 year ago

Model Distillation: watch videos. Recommended video: Lecture 10 - Knowledge Distillation | MIT 6.S965, duration 1:07:22. Invideo.cc - watch the best videos for free