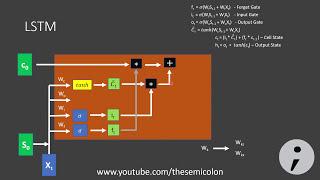

Recurrent Neural Networks (RNN) and Long Short-Term Memory Networks (LSTM)

Comments:

So if you have N inputs X, then you loop N times, producing N states S?
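
A minimal sketch of that loop, assuming a single-unit (scalar) Elman-style RNN with a tanh activation and made-up weights `w_x`, `w_h`, `b` and initial state `h0` (all hypothetical, not taken from the video). N inputs give N loop iterations and N hidden states, with the same weights reused at every step.

```python
import math

def rnn_states(xs, w_x=0.5, w_h=0.8, b=0.0, h0=0.0):
    """Return one hidden state per input (hypothetical scalar weights)."""
    states = []
    h = h0  # state before the first input; zero is assumed here
    for x in xs:
        # The same w_x, w_h, b are shared across all time steps
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

states = rnn_states([1.0, 0.0, -1.0, 2.0])
print(len(states))  # 4
```

Each state depends on the current input and the previous state, so later states carry (a squashed summary of) everything seen so far.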

Solves the vanishing gradient problem with extra interactions 👏🏻👏🏻

Great insight!!!

Question...

What are the initial values of the cell state and the hidden state when there is no previous input, i.e. for the first input?
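
A common convention is to start both states at zero; for example, PyTorch's `nn.LSTM` defaults `h_0` and `c_0` to zeros when they are not passed in. The sketch below assumes a single-unit LSTM with hypothetical scalar weights in `W` and applies the usual forget/input/output gate equations, starting from zero states.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical weights per gate: (input weight, recurrent weight, bias)
W = {
    "f": (0.5, 0.1, 1.0),  # forget gate
    "i": (0.6, 0.2, 0.0),  # input gate
    "o": (0.4, 0.3, 0.0),  # output gate
    "g": (0.9, 0.5, 0.0),  # candidate cell value
}

def lstm_step(x, h_prev, c_prev, w=W):
    """One LSTM time step for a single unit; returns (h, c)."""
    def pre(gate):
        wx, wh, b = w[gate]
        return wx * x + wh * h_prev + b
    f = sigmoid(pre("f"))
    i = sigmoid(pre("i"))
    o = sigmoid(pre("o"))
    g = math.tanh(pre("g"))
    c = f * c_prev + i * g  # new cell state: keep some old, add some new
    h = o * math.tanh(c)    # new hidden state
    return h, c

# No previous input yet: both states start at zero
h, c = 0.0, 0.0
for x in [1.0, -0.5, 2.0]:
    h, c = lstm_step(x, h, c)
```

Zero is a convention, not a requirement; some models learn the initial states or carry them over from a previous sequence.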

It would be much better with actual numbers instead of just saying X, R, etc.

Excellent video.

Vanishing Gradient problem is still unclear to me.
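
A minimal sketch of why the gradient vanishes in a plain RNN, assuming a scalar tanh RNN with hypothetical weights `w_x` and `w_h`. By the chain rule, the gradient of the last state with respect to the first is a product with one factor per time step, each equal to `w_h * (1 - h_t**2)`. When those factors are below 1, the product shrinks exponentially with sequence length, so early inputs barely affect the training signal.

```python
import math

def grad_through_time(xs, w_x=0.5, w_h=0.8, h0=0.0):
    """Return d h_T / d h_0 for a scalar RNN h_t = tanh(w_x*x_t + w_h*h_{t-1})."""
    h, grad = h0, 1.0
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
        # Local derivative d h_t / d h_{t-1} = w_h * tanh'(.) = w_h * (1 - h_t^2)
        grad *= w_h * (1.0 - h * h)
    return grad

for T in (1, 5, 20, 50):
    print(T, grad_through_time([1.0] * T))
```

The LSTM's additive cell update, `c = f * c_prev + i * g`, is commonly credited with easing this: along the cell-state path the per-step factor is the forget gate `f`, which the network can keep close to 1.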

Sir, in an LSTM, is h(t) the final output, or is it O(t) or y(t)?

Sir, how do we know whether we want y1, y2, or both?
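
One common setup, sketched here under assumptions: h(t) is the hidden state passed to the next step, and an output y(t) is computed from it by an extra output layer. Whether you keep every y(t) or only the last one depends on the task (e.g. tagging each word vs. classifying a whole sentence). The output layer below (`readout`, with weights `w_y`, `b_y` and a sigmoid) and the hidden-state values are hypothetical.

```python
import math

def readout(h, w_y=2.0, b_y=-0.5):
    """Hypothetical output layer: y(t) = sigmoid(w_y * h(t) + b_y)."""
    return 1.0 / (1.0 + math.exp(-(w_y * h + b_y)))

def outputs(hidden_states, many_to_many=True):
    """Map hidden states h(1..N) to outputs.

    many_to_many=True: one y per time step (e.g. a label for every word).
    many_to_many=False: only the last y (e.g. one label for the sequence).
    """
    ys = [readout(h) for h in hidden_states]
    return ys if many_to_many else ys[-1:]

hs = [0.1, -0.3, 0.7]  # made-up hidden states from a recurrent layer
print(len(outputs(hs)))                      # 3
print(len(outputs(hs, many_to_many=False)))  # 1
```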

Thank you!!!

B does not hold good.

How do you account for the fact that earlier inputs implicitly have a greater influence on the output? I.e., input 0 affects states 0, 1, 2, 3, 4, etc., whereas input 5 only affects states 6, 7, 8, 9.

Would this be like a word earlier in a sentence having a greater influence than a word later on? I feel like this behaviour would not be desired. Thank you!

Great video

This is absolutely confusing. At no point is it clear whether you are talking about a single neuron or an entire network, or how the cells are connected to a neuron.

Gem ❤️

The semicolon reminds me of the side view of the 'EVE' character from Wall-E.

Thanks for your help

Wow... great stuff

Bro, do you know Hindi???

Then I'll just comment in Hindi.