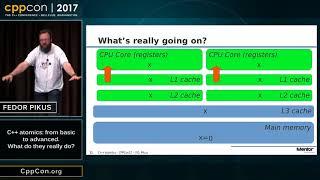

CppCon 2017: Fedor Pikus “C++ atomics, from basic to advanced. What do they really do?”

Comments:

"It's hard to write if you don't care about occasional bugs; it's really hard if you do"

For the 2nd question: memory_order_relaxed only guarantees that the operation itself is performed atomically; it applies no ordering constraint to the memory operations around it.
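A minimal sketch of what that means in practice, assuming a plain shared counter (the function name `count_with_relaxed` is hypothetical and not from the talk). Relaxed increments never lose an update, but they say nothing about the order of other reads and writes, so the result is only read after the threads have been joined:

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Hypothetical helper: several threads bump one shared counter with relaxed
// increments; the final total is returned once all threads have finished.
long count_with_relaxed(int num_threads, int increments_per_thread) {
    std::atomic<long> counter{0};
    std::vector<std::thread> workers;
    for (int t = 0; t < num_threads; ++t) {
        workers.emplace_back([&counter, increments_per_thread] {
            for (int i = 0; i < increments_per_thread; ++i) {
                // Atomic read-modify-write: no increment is lost.
                // Relaxed order: no ordering is imposed on other memory accesses.
                counter.fetch_add(1, std::memory_order_relaxed);
            }
        });
    }
    // Joining a thread synchronizes with its completion, so the load below
    // sees every increment even though it is relaxed too.
    for (auto& w : workers) {
        w.join();
    }
    return counter.load(std::memory_order_relaxed);
}
```

Calling `count_with_relaxed(4, 10000)` should return 40000 on every run. Relaxed order would not be enough if the counter were used to signal that some other, non-atomic data is ready.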

Finally, this guy made me understand memory ordering. Nice presentation...

A measurably failed attempt to create drama at the beginning: those are different algorithms, and you can't compare apples to oranges.

This talk was superb. Complex topic delivered eloquently. Thanks for that.

That was a really interesting and useful talk, thanks a lot!

Thanks

Why didn't the designers of C++ make it so that each new thread gets its own copy of all variables in the program, so that such problems never arise?

Really love this talk. Spending one hour watching this video is actually more efficient than spending a day reading cppreference :)

You helped me understand in more detail how atomics work under the hood. When it comes to hardware, the granularity of optimization can be really small.

The expression "become visible" is just awful.

What Pikus meant by "becoming visible" after an acquire barrier is simply that operations that appear after the barrier in the program are guaranteed to execute after the barrier.

Likewise, "becoming visible" before a release barrier means operations before the barrier in the program are guaranteed to have been executed by the time the barrier is reached.

A little caveat is that for the acquire barrier, operations before the barrier can be moved to execute after the barrier, but operations after the barrier in the program cannot be moved to execute before the barrier is reached. The opposite applies to a release barrier.
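The one-way behavior described above can be sketched with a flag that publishes ordinary data. This is only an illustration under assumed names (`publish_and_read`, `payload`, `ready` are hypothetical, not from the talk): the write to `payload` may not move below the release store, and the read of `payload` may not move above the acquire load that sees `true`.

```cpp
#include <atomic>
#include <cassert>
#include <thread>

// Hypothetical example: hand a plain int from one thread to another
// through an atomic flag using release/acquire ordering.
int publish_and_read() {
    int payload = 0;                  // ordinary, non-atomic data
    std::atomic<bool> ready{false};   // the flag that carries the ordering
    int observed = -1;

    std::thread producer([&] {
        payload = 42;  // must stay before the release store below
        ready.store(true, std::memory_order_release);
    });

    std::thread consumer([&] {
        // Spin until the flag is seen; the acquire load keeps the read of
        // payload from being moved ahead of it.
        while (!ready.load(std::memory_order_acquire)) {
            std::this_thread::yield();
        }
        observed = payload;  // guaranteed to read the published value
    });

    producer.join();
    consumer.join();
    return observed;
}
```

With both stores and loads switched to `memory_order_relaxed`, the flag would still be atomic, but reading `payload` in the consumer would become a data race.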

To be really honest, with this I finally understood why threads are important and, from there, got a little intro to parallel computing.

Really getting into the C++ magic stuff

Thank you, Mr. Fedor!

I figure that atomics work a lot like git. You prepare memory in your local cache ("local repo") and release it ("pushing to a remote origin").

Awesome talk; I've watched it several times! I have a question, though:

I understand that unsynchronized reading and writing to the same location in memory is undefined behavior. I also understand that the CPU/memory subsystem moves data in terms of cache lines. What I am still unclear on is what happens if I have two variables in my program that just so happen to be on the same cache line (e.g. two 32-bit ints), but I didn't know that: My program has two threads that each read and write from their own variable, but I, the programmer, thought it was OK because there's no (intentional) "sharing?" If these threads are non-atomically writing to their own variables, do they still have the same problem where they can "stomp" on each other's writes?
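A hedged sketch of the situation in the question, assuming a 64-byte cache line (typical, but not guaranteed) and hypothetical names (`Adjacent`, `Padded`, `bump_separately`). In the C++ memory model two distinct `int` objects are separate memory locations, so threads that each touch only their own variable do not race and cannot stomp on each other's writes, even on a shared cache line. What the shared line can cost is speed, an effect usually called false sharing.

```cpp
#include <cassert>
#include <thread>
#include <utility>

// Two ints that very likely sit on the same cache line.
struct Adjacent {
    int a = 0;
    int b = 0;
};

// The same two ints, each forced onto its own 64-byte boundary.
// 64 is an assumed cache line size, not something the standard promises.
struct Padded {
    alignas(64) int a = 0;
    alignas(64) int b = 0;
};

// Each thread writes only its own member, without atomics or locks.
// This is well defined: a and b are distinct memory locations.
template <typename Counters>
std::pair<int, int> bump_separately(int n) {
    Counters c;
    std::thread t1([&c, n] { for (int i = 0; i < n; ++i) ++c.a; });
    std::thread t2([&c, n] { for (int i = 0; i < n; ++i) ++c.b; });
    t1.join();
    t2.join();
    return {c.a, c.b};
}
```

Both `bump_separately<Adjacent>(n)` and `bump_separately<Padded>(n)` should return `{n, n}`. Any difference between them would show up in timing rather than in results, and an optimizing compiler may hide even that by keeping the counters in registers.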

Great presentation! That intro was great, LOL

All these conferences on cppcon are awesome.

Wonderful!!!

Excellent. First time I really understand these ideas.

Great talk, it's the clearest explanation I've ever watched.

The most decent and clear explanation of atomics I've seen so far.

Does anyone know which talk he is referring to when he says "I'm not going to go into as much detail as Paul did"?

Great talk. I have my own benchmark, but it shows atomic > mutex > spinlock ('>' means faster). My benchmark definitely doesn't agree with his. Is there any chance I can take a look at the source code of his benchmark?

The message I get is "use someone else's lock-free queue".

What a tough crowd

Am I the only one who is slightly confused, especially about the memory order part? Everyone says this talk is great, but I had watched Herb Sutter's talk on atomics before and thought I somewhat understood it. Now that I've watched this one, I'm confused.

Logarithmic conference cppcon.

Thanks.

C++ is beautiful, and this talk is great.

How fantastic!

"Welcome to the defense of dark art class" 🤣

It really helps a lot!

Eye opening presentation, very well explained

Best video explaining memory ordering ever.

A future lecture should just invite a CPU architect to explain.

This was an amazing talk. I’ve generally had a very loose understanding of memory ordering - enough to know I need to stay away from it. I can confidently say I finally understand it thanks to this.

Rarely will I comment on videos. This video was just too good not to. Hands down one of my favorite talks.

Good talk

I have listened to some of Fedor’s previous talks on metaprogramming, and this lecture on atomicity in C++ is also nicely explained. Job well done, and thank you very much for sharing your experience and knowledge with the community!
