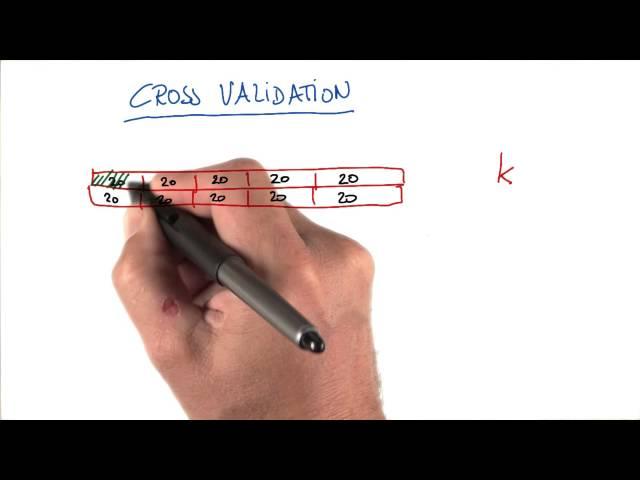

K-Fold Cross Validation - Intro to Machine Learning

Comments:

Interesting video. Thanks for sharing.

That huge popped blister on his hand is lowkey distracting

You're missing a part of your skin, sir.

The voice sounds like Sebastian Thrun. Great guy :)

1. Train/Validation 2. Either 3. K-Fold CV

I've seen a lot of answers I disagree with in the comments, so I'll explain. First, the terminology is Train/Validation when used to train the model. The Test set should be taken out prior to doing the Train/Validation split and remain separate throughout training. The Test set will then be used to test the trained model. Second, the answers. 1. Obviously training will take longer doing it 10 times. 2. While training did take longer, you are actually running the same size model in production. All other things being equal the run times of both already trained models should also be equal. 3. The improved accuracy is why you would want to use K-Fold CV.

If I'm wrong, please explain. I'll probably never see your comments, but you could help someone else.
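The workflow described in the comment above can be sketched as follows. This is a minimal illustration, not the video's method: the helper names `split_off_test` and `k_fold_rounds`, the 100-sample dataset, and the 20% test fraction are all assumptions made for the example.

```python
import random

def split_off_test(n_samples, test_fraction, seed=0):
    """Shuffle indices and set aside a test set that is never used for tuning."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = int(n_samples * test_fraction)
    return indices[n_test:], indices[:n_test]  # (development, test)

def k_fold_rounds(dev_indices, k):
    """Yield (train, validation) index lists, one pair per fold."""
    folds = [dev_indices[i::k] for i in range(k)]
    for i, validation in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation

# Hypothetical sizes: 100 samples, 20 held out for the final test.
dev, test = split_off_test(n_samples=100, test_fraction=0.2)
rounds = list(k_fold_rounds(dev, k=10))

for train, validation in rounds:
    # Each round trains on 72 development points and validates on the other 8.
    assert len(train) == 72 and len(validation) == 8
    # The held-out test indices never appear in any round.
    assert not set(validation) & set(test)
    assert not set(train) & set(test)
```

The test indices are touched only once, after all tuning is finished, which is the point the comment makes about keeping the test set separate.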

First of all, cover your disgusting-looking wound. Second, explain to people why cross-validation is needed in the first place and who needs it.

won't this make the model specialized for the data that we have??

can't watch it... the blister is too annoying

Can anyone share the answers to those questions, please?

Liked because the blister was made by a barbell.

your pen is a disaster

ihhhhh

This is incorrect. You should correct this video, as you're encouraging people to mix their train and test sets, which is a cardinal sin of machine learning. Every time you say test set, you should be saying validation set. Test set can only be tested one time, and cannot be used to inform hyperparameters.

So is this a supervised, unsupervised, or semi-supervised algorithm?

Why is it so hard to find a simple, concrete, by-hand example of k-fold cross validation? All the documentation I can find is very generalized information, but there are no practical examples anywhere.
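A tiny worked example with real numbers, small enough to check by hand. Everything here is made up for illustration: six target values, three folds of two points each, and a deliberately trivial "model" that always predicts the mean of its training targets.

```python
from statistics import mean

y = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
folds = [[0, 1], [2, 3], [4, 5]]  # positions of the validation points in each round

fold_errors = []
for validation in folds:
    train = [y[i] for i in range(len(y)) if i not in validation]
    prediction = mean(train)  # the "model": always predict the training mean
    # Mean absolute error on the two points this round held out.
    fold_errors.append(mean(abs(y[i] - prediction) for i in validation))

cv_error = mean(fold_errors)
print(fold_errors)         # [3.0, 0.5, 3.0]
print(round(cv_error, 2))  # 2.17
```

By hand: round 1 trains on 3, 4, 5, 6 (mean 4.5) and misses 1 and 2 by 3.5 and 2.5, so its error is 3.0. Round 2 trains on 1, 2, 5, 6 (mean 3.5) and misses 3 and 4 by 0.5 each. Round 3 trains on 1, 2, 3, 4 (mean 2.5) and misses 5 and 6 by 2.5 and 3.5. The cross-validation score is the average of the three round errors, (3.0 + 0.5 + 3.0) / 3.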

Here in K-fold CV, the model in each fold computes an average result. So is the entire 10-fold CV an average of averages? What is meant by 5 times 10-fold CV? How is it different from normal 10-fold CV? Can someone help me understand this?
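One common reading of "5 times 10-fold CV" is repeated k-fold: the 10-fold procedure is run 5 times with a different shuffle each time, giving 50 fold scores that are averaged together. The sketch below assumes that reading; the toy targets, the mean-predictor "model", and the helper name `fold_scores` are illustrative only.

```python
import random
from statistics import mean

def fold_scores(y, k, seed):
    """Run one k-fold pass with a seeded shuffle; return one error per fold."""
    idx = list(range(len(y)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    scores = []
    for i, validation in enumerate(folds):
        train = [j for f_i, fold in enumerate(folds) if f_i != i for j in fold]
        prediction = mean(y[j] for j in train)  # toy model: training mean
        scores.append(mean(abs(y[j] - prediction) for j in validation))
    return scores

y = [float(v) for v in range(1, 21)]  # 20 made-up target values

# Plain 10-fold CV: 10 fold scores, averaged once.
single = fold_scores(y, k=10, seed=0)

# 5 x 10-fold CV: five differently shuffled passes, 50 fold scores in total.
repeated = [s for rep in range(5) for s in fold_scores(y, k=10, seed=rep)]

print(len(single), mean(single))
print(len(repeated), mean(repeated))
```

Each fold score is itself an average over that fold's validation points, so the final number is indeed an average of averages. Repeating with new shuffles mainly reduces how much the estimate depends on one particular way of cutting the data into folds.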

Do a simple practical example by hand, not always just theory. People understand better when there are actual numbers and you go through the entire procedure, even if it's a trivial example.

Simple and beautiful

can't stop looking at the blister on his hand

Can anybody give me the link to the video by Mrs. Katie that describes the training and test sets?

But this doesn't solve the issue of choosing the bin size, i.e. trade-off between training set and test set (although you are now using all the data for both tasks at some point).

Hi bro, thanks for sharing this learning. Just a question: which application did you use to make this tutorial? It's amazing... your text appears on and above your hand.

Thanks, clear

I have a small dataset of 48 samples. If I apply an MLP using 6-fold CV, do I still need a validation set to avoid a biased result on the small dataset? Please suggest.

Thanks for the video! Quick (silly) question: in any of those validation methods, every time you change the training data, do you re-fit the model? If so, each validation step is with respect to a different model fit. How do you then decide on your final model?

Thank you very much, that video is helpful.

hey guys from ECON704

So, what is the difference from train_test_split with test_size=0.1?

the hand is so annoying

what are you even saying? can't understand anything!

I know it's a very old video, but still, it's not necessary to show your hand while writing.

Nice !

Interesting to see your video presented like this. Mind sharing how you present your drawing like this?

Great explanation thanks!

What I don’t get is: say you’ve picked the 1st bin as your test set for the first run and the rest as your training set. Hasn’t the model learned everything in the training set for the rest of the runs? What’s the point of using all the k’s when they’ve already been used before?

do all the 10 folds have to be of the same size? what is the effect if they are of different sizes?
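Folds do not have to be exactly equal; when the sample count is not divisible by k, a common convention is to let sizes differ by at most one. The sketch below assumes that convention. The function `fold_sizes`, the 103-sample count, and the per-fold scores are hypothetical numbers chosen only to show the effect.

```python
def fold_sizes(n_samples, k):
    """Split n_samples into k fold sizes that differ by at most one."""
    base, extra = divmod(n_samples, k)
    # The first `extra` folds each take one additional sample.
    return [base + 1 if i < extra else base for i in range(k)]

sizes = fold_sizes(103, 10)
print(sizes)  # [11, 11, 11, 10, 10, 10, 10, 10, 10, 10]

# Hypothetical accuracy per fold, invented for this illustration.
scores = [0.80, 0.82, 0.78, 0.90, 0.85, 0.88, 0.79, 0.84, 0.86, 0.81]

# Plain mean: every fold counts equally, whatever its size.
plain = sum(scores) / len(scores)

# Size-weighted mean: every sample counts equally.
weighted = sum(s * n for s, n in zip(scores, sizes)) / sum(sizes)

print(plain, weighted)
```

With sizes this close, the two averages barely differ. The gap grows only when fold sizes are very uneven, in which case the plain mean gives each point in a small fold more influence than a point in a large fold.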

K-fold cross validation runs k learning experiments, so at the end you get k different models... Which one do you choose?
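A common practice, sketched here under assumptions, is to keep none of the k models: the cross-validation score is used to compare settings, and the winning setting is then refit once on all the available training data. The toy "model" (a shrunken training mean), the candidate `shrink` values, and the data are all invented for the illustration.

```python
from statistics import mean

def fit(y_train, shrink):
    """Toy model: predict a shrunken version of the training mean."""
    return shrink * mean(y_train)

def cv_error(y, k, shrink):
    """Average validation error over k folds for one candidate setting."""
    folds = [list(range(i, len(y), k)) for i in range(k)]
    errors = []
    for validation in folds:
        held_out = set(validation)
        train = [y[j] for j in range(len(y)) if j not in held_out]
        prediction = fit(train, shrink)  # a fresh fit in every round
        errors.append(mean(abs(y[j] - prediction) for j in validation))
    return mean(errors)

y = [4.0, 5.0, 6.0, 5.0, 4.0, 6.0]  # made-up targets
candidates = [0.5, 1.0]             # hypothetical settings to compare

# Use CV only to pick the setting, then refit on everything.
best = min(candidates, key=lambda s: cv_error(y, k=3, shrink=s))
final_model = fit(y, best)

print(best, final_model)  # 1.0 5.0
```

The k fitted models are throwaway: they exist to estimate how well a given setting generalizes, not to be deployed.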

This video is really useful, thank you very much.

It helped me a lot.

The test bin is different every time, so how do you average the results? Can you please provide a detailed explanation on this?

It's obvious that the results are: train/test, train/test, and then cross validation.

Cross validation runs the program "k" times, so it's "k" times slower, but on the other hand it is more accurate.

I think the answers are train/test, train/test, and then 10-fold C.V. Also, don't make a video with some nasty open sore on your hand please. Wear a glove or something.

What do you mean by data points? Do you mean instances?
