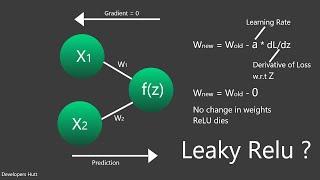

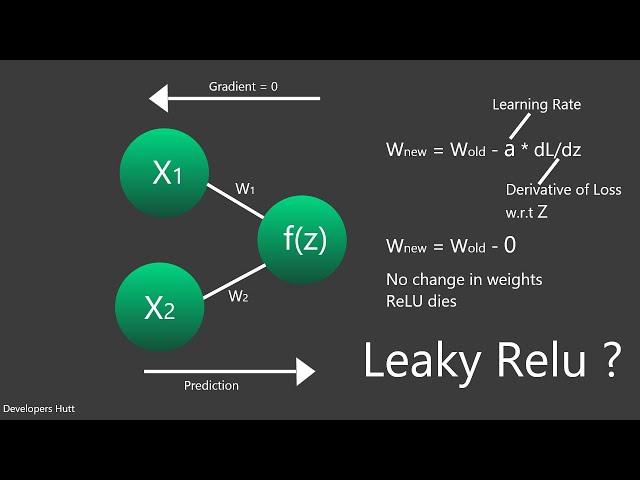

Dying Relu Problem || Leaky Relu || Quick Explained || Developers Hutt

Comments:

I can't believe I finally found you

I think that when the gradient is zero, the neuron will still learn unless the weights are greater than zero, but when both the weight and the gradient are zero, it is a dying ReLU. Correct me if I am wrong.
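
To make the question concrete, here is a minimal sketch of the gradient a single ReLU neuron receives for its weight. It assumes a scalar input `x`, weight `w`, bias `b` and an upstream gradient `upstream`; all of these names are hypothetical. By the chain rule, the weight gradient is the upstream gradient times the activation's derivative times `x`. So under ReLU, whenever the pre-activation `w*x + b` is negative the update is zero, however large the weight is. Leaky ReLU keeps a small slope `alpha` on the negative side, so some gradient still flows. The value `alpha = 0.01` used here is an assumed, commonly chosen default.

```python
def relu_grad(z: float) -> float:
    """Derivative of ReLU(z) = max(0, z); 0 is used at z == 0 by convention."""
    return 1.0 if z > 0 else 0.0


def leaky_relu_grad(z: float, alpha: float = 0.01) -> float:
    """Derivative of Leaky ReLU: slope 1 for z > 0, slope alpha otherwise."""
    return 1.0 if z > 0 else alpha


def weight_grad(w: float, b: float, x: float, upstream: float, act_grad) -> float:
    """dLoss/dw for a single neuron a = act(w*x + b), via the chain rule."""
    z = w * x + b                      # pre-activation
    return upstream * act_grad(z) * x  # dL/da * da/dz * dz/dw


# Positive weight, but w*x + b = 10 - 20 = -10 < 0:
print(weight_grad(w=5.0, b=-20.0, x=2.0, upstream=1.0, act_grad=relu_grad))        # 0.0
print(weight_grad(w=5.0, b=-20.0, x=2.0, upstream=1.0, act_grad=leaky_relu_grad))  # 0.02
```

What matters under ReLU is the sign of the pre-activation, not the sign of the weight itself.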

Short and sweet! Thanks for making this

In the case of stochastic gradient descent, this neuron may die only for one particular record: the one for which the weighted sum of the inputs is negative. During backpropagation its local gradient will then be zero, which blocks the gradient coming back from later in the network, so its weights will not be updated, and we can say that this neuron is dead. But on the next forward pass, with a new record, the weighted sum could take any value, so the neuron may become active for that record. Hence it will never be dead forever???
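
The per-record reasoning above can be checked with a small sketch. It assumes a single neuron with weight vector `w` and bias `b` applied directly to a few hypothetical input records (`data` is made up for illustration). For each record, it reports whether the pre-activation is positive, which is when ReLU lets gradient through. The neuron counts as dead on this dataset only if no record gives a positive pre-activation. In that case no record can produce a gradient for its weights.

```python
from typing import List, Sequence


def pre_activation(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Weighted sum of the inputs plus bias for one neuron."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def active_mask(w: Sequence[float], b: float, data: Sequence[Sequence[float]]) -> List[bool]:
    """True where ReLU passes gradient for that record (pre-activation > 0)."""
    return [pre_activation(w, b, x) > 0 for x in data]


def is_dead(w: Sequence[float], b: float, data: Sequence[Sequence[float]]) -> bool:
    """Dead on this dataset: no record produces a positive pre-activation."""
    return not any(active_mask(w, b, data))


data = [[1.0, 2.0], [-3.0, 0.5], [4.0, -1.0]]  # hypothetical records

# Inactive for the second record only: gradient is blocked for that record, not for all.
print(active_mask([1.0, 1.0], 0.0, data), is_dead([1.0, 1.0], 0.0, data))
# [True, False, True] False

# A large negative bias makes every pre-activation negative: the neuron is dead here.
print(active_mask([1.0, 1.0], -10.0, data), is_dead([1.0, 1.0], -10.0, data))
# [False, False, False] True
```

In a deeper network the neuron's inputs come from earlier layers, which keep training, so this check only describes the neuron at the current parameters.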

👏👏👏
