Comments:

good sound design

I would also love to see all the terminology used together and defined in a single video, such as Bit, Node, Neuron, Layer, Weight, Deep learning, Entropy, Capacity, Memory etc. I am trying to write them down myself as a little glossary. Their meanings are so much greater when they are grouped together.

The term Distributed Representation when compared to musical notes makes it seem like it has its own image Resonance or Signature Frequency. As if we really are seeing or feeling the totality of the image of the firing neurons.

We seem to be addicted to understanding perceptions from a Human Point of View, imagine if we could begin to find translations to see them from Animal Point of Views, Different Sensory Combination Point of Views and different combinations of Layered Senses. The potential is infinite.

I like the addition of the Linear Learning Machine versus one that forgets and uses Feelings. It seems that by combining both memory styles you would have more unique potentialities in the flavor pot of experiences, especially when the two interact with each other. Not to mention the infinite different perspectives they would each carry while traveling through time. Small and large epochs of time.

I seem to keep coming back to the Encryption / Decryption videos on how it requires complete Randomness to create strong encryption and how the babies babbling was seemingly random in nature, which begs the question, was it truly random or could we simply just not see the pattern from our limited perspective?

What are the scale and size of the pattern? And what conceptions and perspectives need to merge to simply find the Key to interpreting it?

you are wrong within the first 40 seconds.

Amazing <3

After 35 seconds, you are already wrong.

We do not think in sentences!!!

Thinking is wordless.

However, we are translating our thinking into words.

But this is not necessary.

The point is that language is only necessary if we want to communicate with another person.

But thinking comes first, NOT as a result of sentences.

If you get good at meditation and centering yourself, you can drive your car without verbalizing what you are doing.

You can make decisions and act them out without verbalization, internally or externally!

Language is only a descriptor, not the thinking faculty itself!!!

it's all a chain of cause and effect from start to finish: each level or layer sharpens and zeroes in on the exact match, refining it until a result is locked in. The human brain compares past results to incoming stimuli, and the result is also linked to chains of associations. For example, if the result is "it's a dog", the associations are: dogs are furry, playful, dangerous, have a master, wag their tail when happy, and so on. But associations are unique to each separate mind ????

Very good content.

I want to mention here that I watched the video halfway, and I must say: I am a complete noob when it comes to biology, but without making things complicated for a person like me, you made it incredibly clear and helped me appreciate how amazingly our brain works and generalizes stuff, especially with your example of the short story (can you please mention the author's name? I couldn't quite catch it and the captions are not clear either). Thank you for making this content, I'm grateful. Jazakallah hu khayr

Eureka!

gpt4

Absolutely brilliant video!

now that's a brilliant explanation of neural networks. better than anything I've ever seen.

holy shit this is some next level explanation

thank you so much!

Just wow. Awesome explanation.

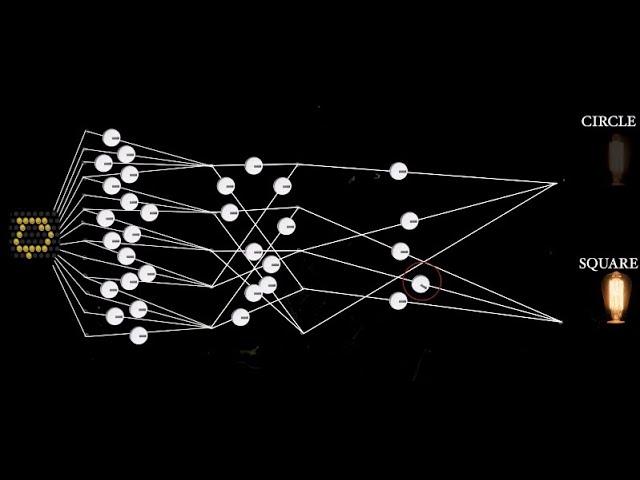

This is a well-done visual representation of artificial neural networks and how they compare to biological ones. The only item I might add is that the real reason the "gradual" activation functions mentioned in the latter half of the video are so useful is that they are differentiable. Differentiability is what truly allowed backpropagation to shine: the chain rule lets the error of a neuron be determined from the error of the neurons following it, rather than calculating each neuron's error from the output directly every time.
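
A minimal sketch of the point above, assuming a toy network with one input, one hidden sigmoid neuron, one output sigmoid neuron, and a squared-error loss. None of these specifics come from the video or the comment, and the names `w1`, `w2`, `backprop` and `numeric_grad` are illustrative. It computes the hidden neuron's error term from the error term of the neuron after it (the chain rule), then compares the result with a finite-difference estimate.

```python
import math


def sigmoid(z):
    """Smooth, differentiable activation."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z):
    """Derivative of the sigmoid, which exists for every z."""
    s = sigmoid(z)
    return s * (1.0 - s)


def forward(x, w1, w2):
    """Toy network: x -> hidden neuron -> output neuron."""
    z1 = w1 * x
    a1 = sigmoid(z1)
    z2 = w2 * a1
    a2 = sigmoid(z2)
    return z1, a1, z2, a2


def loss(x, y, w1, w2):
    """Squared error between the network output and the target y."""
    return 0.5 * (forward(x, w1, w2)[3] - y) ** 2


def backprop(x, y, w1, w2):
    """Gradients of the loss with respect to w1 and w2 via the chain rule."""
    z1, a1, z2, a2 = forward(x, w1, w2)
    delta2 = (a2 - y) * sigmoid_prime(z2)      # error term of the output neuron
    delta1 = delta2 * w2 * sigmoid_prime(z1)   # hidden error, reusing delta2
    return delta1 * x, delta2 * a1


def numeric_grad(x, y, w1, w2, eps=1e-6):
    """Central finite differences, used only as an independent check."""
    g1 = (loss(x, y, w1 + eps, w2) - loss(x, y, w1 - eps, w2)) / (2 * eps)
    g2 = (loss(x, y, w1, w2 + eps) - loss(x, y, w1, w2 - eps)) / (2 * eps)
    return g1, g2


if __name__ == "__main__":
    print("backprop:", backprop(0.5, 1.0, 0.8, -0.4))
    print("numeric: ", numeric_grad(0.5, 1.0, 0.8, -0.4))
```

With a hard step activation, `sigmoid_prime` would have no useful counterpart (the slope is zero almost everywhere), so `delta1` could not be passed back this way.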

Wow this is really really great...you are really good at explaining

so adding hidden layers allows a NN to solve more complex problems. How many layers is too many? You are limited by the speed of the computer training the NN. I assume too many layers allow the NN to "memorize" instead of generalizing. Any other limits on the number of hidden layers?

What about the number of neurons/nodes per layer? Is there a relationship between the number of inputs and the number of neurons/nodes in the network?

What about the relationship between the number of rows in your dataset versus the number of columns? As I understand it, the number of rows imposes a limit on the number of columns. Adding rows to your dataset allows you to expand the number of columns too. Do you agree or have a different understanding?

OUTSTANDING VIDEOS!

John D Deatherage

This is majorly bullshit, deliberately trying to be einstein or god,

There's no magic happening, its just simple mathematical intuitive modelling, and speaking of the brain mechanism then nobody in the world knows how it exactly works, we only have theories, unproven.

always looking for new content to watch on this topic.. great channel

Excellently explained

amazing work! thank you

Isn't the universal approximation theorem the mathematical proof that a NN can solve and model any problem/function?

Great explanation of why we use a smooth activation function

Your videos are great at making someone more curious about a subject. They have the right balance of simplification and complexity.

As a medical student studying neuroanatomy and physiology, this is a whole new perspective to me!!! Keep teaching us more!! You are the best teacher :)

Love your videos, can you please post videos more often. Thanks, your videos are always worth the wait.

a message to the ones responsible for the choice of background music to convey the mood: "you're pretty good".

P.S. In fact - you are AWESOME.

I just don't understand, why this channel is not popular.

Are you going to continue doing videos on cryptography? Your Khan Academy series is amazing!

Consensus mechanism?

Sir any blockchain related videos in future?

Wow.

Thank you so much for this

Brilliant video, as always. The part on the explanation of a deep neural network was really well explained.

Link to the Hinton lecture?

I’m always very impressed at the novel approach to teaching these subjects - another hit, Brit!

Get rid of the background noise

This kind of content is a treasure.

Beautiful work coming from a beautiful biological neural network about the beauty of artificial neural networks.

Does anyone know the name of the Borges story?

I'm not an expert or anything. But I had just been looking at networks. I was interested in the erdos formula: -

erdos number = ln(population size) / ln(average number of friends per person) = degrees of separation

for example it is thought there is something like 6 degrees of separation and an average of 30 friends each person among the global population.
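
A quick check of the arithmetic above, as a sketch. The ratio ln(N) / ln(k) is a common rough estimate of typical path length in a random network (the term "Erdős number" more usually means collaboration distance to Paul Erdős). The population of 8 billion is an assumption that the comment does not state, and the function name `degrees_of_separation` is illustrative.

```python
import math


def degrees_of_separation(population, avg_friends):
    """Rough estimate: ln(population) / ln(average friends per person)."""
    return math.log(population) / math.log(avg_friends)


if __name__ == "__main__":
    # Assumed world population of 8 billion, with 30 friends each as in the comment.
    print(round(degrees_of_separation(8e9, 30), 1))  # about 6.7
```

So 30 friends per person gives a figure in the neighbourhood of the "six degrees" quoted above.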

But I was also looking at Pareto distributions as well: -

1 - 1/Pareto index = ln(1 - P^n) / ln[1 - (1 - P)^n], where P relates to the population of the wealthiest and (1 - P) is the proportion of wealth they have. For example, if 20% of people have 80% of the wealth, then P = 0.2 and (1 - P) = 0.8. n = 1 (but can be any number... if n = 3 it gives 1% of people with 50% wealth) and the Pareto Index would be 1.161.

Whether it was a fluke I don't know? I did derive the formula as best I could rather than just guessing. But it seemed as though the following was true: -

1 - 1/Pareto Index = 1/Erdos Number

Meaning that the Pareto Index = ln(population size) / [ln(population size) - ln(average number of friends per person)]
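
A small sketch for checking the numbers in this comment, taking its relations at face value for the n = 1 case only. The function names and the population of 8 billion are assumptions for illustration, and the link between the two formulas is the commenter's conjecture, not an established result.

```python
import math


def pareto_index_from_shares(pop_share, wealth_share):
    """n = 1 case: 1 - 1/index = ln(wealth_share) / ln(pop_share)."""
    ratio = math.log(wealth_share) / math.log(pop_share)
    return 1.0 / (1.0 - ratio)


def pareto_index_from_network(population, avg_friends):
    """Conjectured form: ln(N) / [ln(N) - ln(k)]."""
    ln_n = math.log(population)
    ln_k = math.log(avg_friends)
    return ln_n / (ln_n - ln_k)


if __name__ == "__main__":
    # 20% of people holding 80% of the wealth.
    print(round(pareto_index_from_shares(0.2, 0.8), 3))    # about 1.161
    # Assumed population of 8 billion with 30 friends each.
    print(round(pareto_index_from_network(8e9, 30), 3))
    # More friends on average gives a larger index, i.e. lower inequality.
    print(round(pareto_index_from_network(8e9, 300), 3))
```

The second function is just the rearrangement of 1 - 1/index = ln(k) / ln(N), so it agrees with the line above by construction.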

Suggesting that the more friends people had on average then the lower the wealth inequality would be. Which I thought was a fascinating idea...

...But it also seemed as though the wealthiest actually had the most 'friends' or 'connections'. So the poorest would have the fewest connections while the wealthiest would have the most connections - in effect poor people would be channeling their attention toward the wealthiest. Like the top 1% would have an average of around 2,000 connections each (*and a few million dollars) while the poorest would have as few as 1 or 2 connections each (*with just a few thousand dollars... *based on a share of $300 Trillion). Maybe, as in a neural network, the most dominant parts of the brain could be the most connected parts?

As I say I am not an expert. I was just messing around with it.

This, along with everything else on this channel, is fantastic material for schools. I hope it gets noticed by teachers

Yay!!! My favourite channel finally uploads again.. to be honest the quality of your videos makes the wait worth it

Great video! Do you have any idea how these types of Neural Networks would respond to visual illusions? I'm writing my thesis about Neural Networks and biological plausibility and realized that there seems to be a disconnect between human perception and the processing of Neural Networks. Either way, incredibly informative.

This might be more of a tangent to your great video, but my understanding is that intuition and logic aren't really distinct things. The former is merely more hidden in deep webs of logic while the latter is the surface or what is most obvious and intuitive. Ah, see the paradox? It's a false dichotomy resulting from awkward folk terms and their common definitions.

I was always like the teacher's pet in college courses of logic and symbolic reasoning while majoring in philosophy maybe partly because anything that was labeled "counter-intuitive" was just something I would never accept until I could make it intuitive for me via study and understanding. But putting me and my possible ego aside, look at the example of a great mathematician such as Ramanujan and how he described his own process of doing math while in dream-like states. His gift of logic was indeed his gift in intuition, or vice versa, depending on your definitions.