Normality assumption

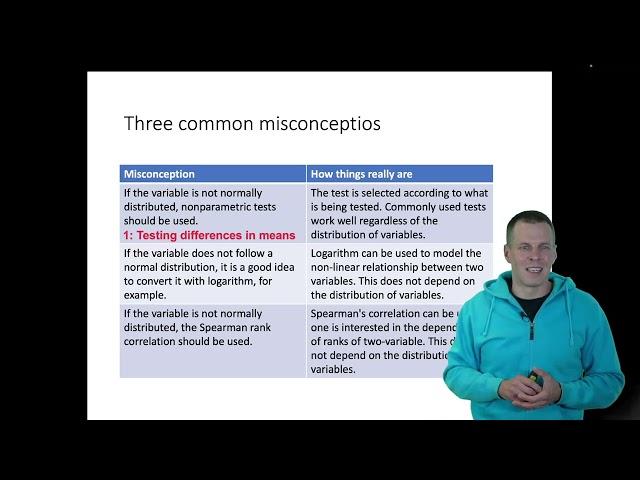

I have often been asked to explain the normal distribution assumption to students writing their master's theses. The assumption is widely misunderstood, and its significance is often taught incorrectly.

When comparing averages, whether the data are normally distributed has no bearing on the validity of the test. Nor does the non-normality of a variable mean that it should be transformed, for example with a logarithm, or that rank correlation should be used instead of ordinary (Pearson) correlation.
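A small simulation can make the claim about comparing averages concrete. The sketch below is an illustration under stated assumptions, not the analysis used in the linked materials. It draws two groups of 10 observations from the same strongly right-skewed exponential distribution, so the null hypothesis of equal means is true. It then runs a pooled two-sample t-test and counts how often the test wrongly rejects at the 5% level. The critical value 2.100922 for 18 degrees of freedom is hard-coded from a t table to avoid third-party libraries. The names `pooled_t`, `mean_var` and `type_i_error_rate` are hypothetical.

```python
import math
import random

N_PER_GROUP = 10
# Two-sided 5% critical value of Student's t with 2 * 10 - 2 = 18 degrees of
# freedom, taken from a t table (assumption: hard-coded to avoid SciPy).
T_CRIT_DF18 = 2.100922


def mean_var(xs):
    """Sample mean and unbiased sample variance."""
    m = sum(xs) / len(xs)
    v = sum((x - m) ** 2 for x in xs) / (len(xs) - 1)
    return m, v


def pooled_t(a, b):
    """Classic two-sample t statistic with pooled variance."""
    ma, va = mean_var(a)
    mb, vb = mean_var(b)
    na, nb = len(a), len(b)
    sp2 = ((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)
    return (ma - mb) / math.sqrt(sp2 * (1 / na + 1 / nb))


def type_i_error_rate(reps=20000, seed=1):
    """Share of simulated data sets in which the t-test rejects a true null."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(reps):
        # Both groups come from the same right-skewed exponential distribution,
        # so the population means are equal and every rejection is an error.
        a = [rng.expovariate(1.0) for _ in range(N_PER_GROUP)]
        b = [rng.expovariate(1.0) for _ in range(N_PER_GROUP)]
        if abs(pooled_t(a, b)) > T_CRIT_DF18:
            rejections += 1
    return rejections / reps


print(f"Type I error rate: {type_i_error_rate():.3f} (nominal 0.050)")
```

If non-normal data made the test invalid, the simulated rejection rate would drift well away from 0.05. In this setting one would expect it to stay close to the nominal level.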

The normality assumption is needed to derive some statistical tests mathematically when the derivation must hold for any sample size. In large samples, however, the same tests can be derived without this assumption, because by the central limit theorem the sampling distribution of the mean is approximately normal. This means that if the sample is large enough, the normality assumption does not need to be considered. In practice, "large enough" is probably around 10 observations.
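The large-sample argument can be illustrated by watching the sampling distribution of the mean lose its skewness as the sample grows. This minimal sketch assumes exponentially distributed data, whose skewness is 2. For such data the skewness of the mean of n observations is 2 / sqrt(n) in theory. The code simulates many sample means and measures their skewness. The function names are hypothetical.

```python
import random


def skewness(xs):
    """Moment-based sample skewness: third central moment / sd**3."""
    n = len(xs)
    m = sum(xs) / n
    m2 = sum((x - m) ** 2 for x in xs) / n
    m3 = sum((x - m) ** 3 for x in xs) / n
    return m3 / m2 ** 1.5


def skewness_of_sample_means(n, reps=10000, seed=2):
    """Skewness of the simulated sampling distribution of the mean of n draws."""
    rng = random.Random(seed)
    means = [sum(rng.expovariate(1.0) for _ in range(n)) / n for _ in range(reps)]
    return skewness(means)


for n in (1, 10, 100):
    # Theory for exponential data: skewness of the mean is 2 / sqrt(n).
    print(f"n={n:>3}: simulated {skewness_of_sample_means(n):.2f}, theory {2 / n ** 0.5:.2f}")
```

How quickly the skewness fades depends on how skewed the original variable is. A variable less skewed than the exponential approaches normality faster.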

Nor do variables need to be transformed because they are non-normal. Transformations are useful when, for example, we want to describe the relationship between two variables with an exponential curve instead of a straight line. The need for a transformation is apparent from a scatter plot of the two variables and does not really depend on their distributions. The same applies to rank correlation: whether to use it depends on the shape of the relationship, not on how each variable is distributed.
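The difference between the distribution of a variable and the shape of a relationship can be shown with simulated data. The sketch below uses only the Python standard library and made-up parameter values; all function names are hypothetical.

- **Case A** has a strongly skewed (log-normal) predictor but a linear relationship, so the raw least-squares slope already recovers the true value.
- **Case B** has a uniformly distributed predictor but an exponential relationship. Taking the log of y turns the curve into a straight line.

For case B, the sketch also compares rank (Spearman) correlation with ordinary (Pearson) correlation.

```python
import math
import random


def pearson(x, y):
    """Ordinary (Pearson) correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def ranks(v):
    """Ranks 1..n; assumes no ties, which holds for continuous simulated data."""
    order = sorted(range(len(v)), key=lambda i: v[i])
    r = [0] * len(v)
    for rank, i in enumerate(order, start=1):
        r[i] = rank
    return r


def spearman(x, y):
    """Rank (Spearman) correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def ols_slope(x, y):
    """Least-squares slope of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


rng = random.Random(3)
n = 2000

# Case A: x is strongly skewed (log-normal), but the relationship is a straight line.
x_a = [rng.lognormvariate(0.0, 1.0) for _ in range(n)]
y_a = [2.0 + 3.0 * xi + rng.gauss(0.0, 1.0) for xi in x_a]

# Case B: x is uniform, but y grows exponentially with x (multiplicative noise).
x_b = [rng.uniform(0.0, 3.0) for _ in range(n)]
y_b = [math.exp(0.5 + 1.5 * xi + rng.gauss(0.0, 0.3)) for xi in x_b]
log_y_b = [math.log(v) for v in y_b]

# Case A: no transformation needed; the raw slope should be close to the true 3.
print("A: slope of y on x:", round(ols_slope(x_a, y_a), 3))
# Case B: logging y linearizes the curve; the slope should be close to 1.5.
print("B: slope of log(y) on x:", round(ols_slope(x_b, log_y_b), 3))
# Case B: the relationship is monotonic but curved, which rank correlation
# captures more fully than Pearson correlation on the raw scale.
print("B: Pearson", round(pearson(x_b, y_b), 3), "Spearman", round(spearman(x_b, y_b), 3))
```

In both cases the decision follows from the shape of the relationship, as a scatter plot would show, rather than from the skewness of the predictor.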

Link to presentation slides: https://osf.io/j6yz7

Examination of regression assumptions with simulations for graduate students: https://osf.io/x8gvp

Mikko Rönkkö