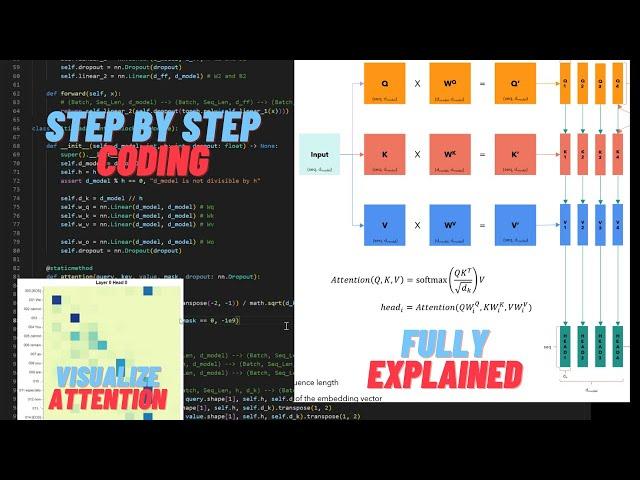

Coding a Transformer from scratch on PyTorch, with full explanation, training and inference.
