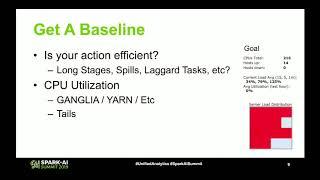

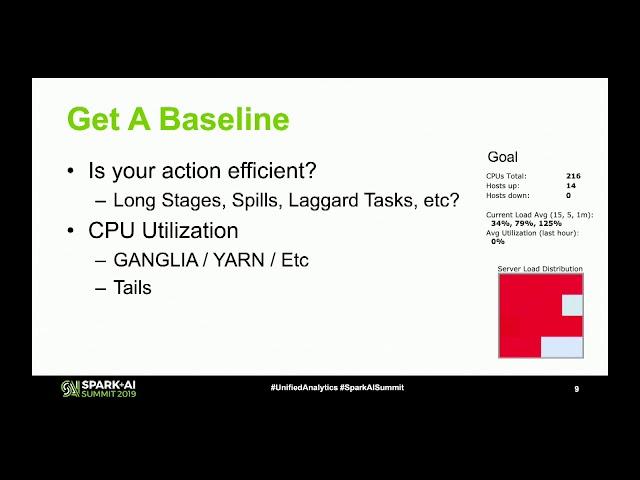

Apache Spark Core—Deep Dive—Proper Optimization Daniel Tomes Databricks
