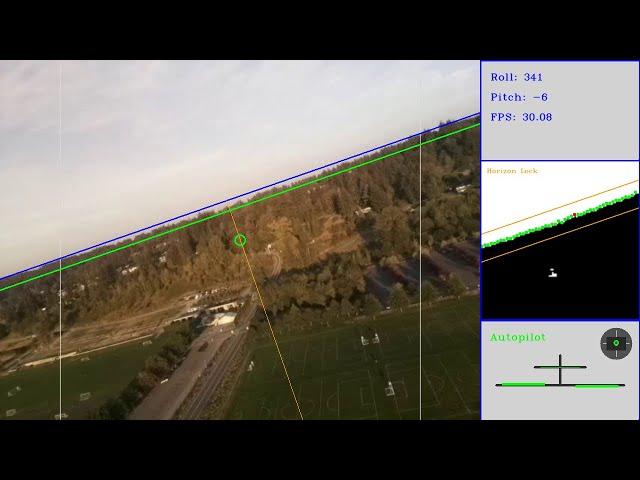

Autonomous Flight with a Camera: Horizon Detection Algorithm

Comments:

Can you please show us your electronics set up?

Super cool! Thank you for sharing!!

And where exactly is he flying? What is the ultimate goal and who assigns it?

Great presentation, thanks for sharing.

Just out of curiosity, does findContours() really have different return values on Windows and Linux?
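On the question above: as far as I know, the documented difference in `findContours()` return values follows the OpenCV major version rather than the operating system. OpenCV 3.x returns `(image, contours, hierarchy)`, while 2.x and 4.x return `(contours, hierarchy)`. Whether the Windows and Linux machines in the video had different OpenCV versions installed is an assumption. A minimal sketch of a version-agnostic unpacking, where `split_contours` is a hypothetical helper name:

```python
def split_contours(result):
    """Return (contours, hierarchy) from whatever cv2.findContours returned.

    OpenCV 3.x returns (image, contours, hierarchy); 2.x and 4.x return
    (contours, hierarchy). The last two items are the same in both cases.
    """
    return result[-2], result[-1]


# Typical use (requires OpenCV, so it is left as a comment here):
#   contours, hierarchy = split_contours(
#       cv2.findContours(mask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE))
```

Taking the last two items avoids branching on the version string.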

Great code review, very clear and concise.

I think segmentation would definitely give you better perception outputs, but it would depend on how quickly you could perform inference. What rate (in Hz) do you get out of your control loop right now?

Depending on that, you might be able to get away with a USB compute stick attached to the Raspberry Pi to augment processing power.

Great work!

great work

OK, what about at night? I assume the algorithm will not work well, because a camera in night mode returns images in different colours.

One thing that you could easily play with is how you do the RGB-to-greyscale conversion. There's a reason why we say "the sky is blue", so taking the greyscale value as the usual 33% red + 33% green + 33% blue doesn't seem to be the smartest way to go about this.

What makes this complicated is that 0% red + 0% green + 100% blue might not be optimal either, especially when there are blue lakes in the picture, since lakes will also have some blue tone, just a less overexposed kind of blue.

Maybe you can compare the color of your sky with the color of the most problematic (brightest) patches of ground. (Which I define as any bright areas on the "ground" side of your horizon line.)

Step A: Take the average sky color minus the average problem patch color. (And since the sky is the sky, you can probably get away with sampling only one pixel per 10x10 block of your downscaled image.)

Step B: Normalize the resulting RGB space vector, by which I mean to a sum of 1, not a Euclidean length of 1. (Problems can occur when the problem patches are brighter than the sky itself; see the first fix idea below.)

Step C: Use those numbers as the mixing ratio for the greyscale conversion.

You'll probably need to come up with a way to obtain a representative sample of problem patches, despite them appearing (by design) only rarely.

My first idea would be to not use the same threshold as for your actual horizon detection, but 90% or so of it (to pick up on darker patches that COULD become problematic with a slightly changed sky color.) And my second idea would be to keep a record of past patch colors across several seconds of footage, and average over those.
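Steps A to C above can be sketched roughly as follows. This is only an illustration in plain Python, not the video author's code: the function names, the equal-weights fallback, and the clamping to 0..255 are all assumptions, and the pixel lists stand in for whatever sampling of sky and problem patches you end up using.

```python
def mean_color(pixels):
    """Average a list of (r, g, b) tuples channel by channel."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def grey_weights(sky_pixels, patch_pixels, fallback=(1 / 3, 1 / 3, 1 / 3)):
    """Steps A and B: difference of mean colours, normalised to sum to 1."""
    sky = mean_color(sky_pixels)
    patch = mean_color(patch_pixels)
    diff = tuple(s - p for s, p in zip(sky, patch))  # Step A
    total = sum(diff)
    # Assumption: if the patches are overall as bright as or brighter than
    # the sky, the sum is zero or negative and the ratio is meaningless,
    # so fall back to equal weights.
    if total <= 1e-6:
        return fallback
    return tuple(d / total for d in diff)  # Step B


def to_grey(pixel, weights):
    """Step C: weighted greyscale value, clamped to 0..255."""
    value = sum(w * c for w, c in zip(weights, pixel))
    return max(0.0, min(255.0, value))


sky_samples = [(150, 190, 250)]    # made-up bluish sky sample
patch_samples = [(120, 140, 130)]  # made-up bright ground patch
weights = grey_weights(sky_samples, patch_samples)
sky_grey = to_grey(sky_samples[0], weights)
patch_grey = to_grey(patch_samples[0], weights)
```

With these made-up samples the blue channel gets the largest weight, and the sky ends up brighter than the patch in greyscale, which is the separation the thresholding step needs. Individual weights can be negative when a patch beats the sky in one channel, which is why the result is clamped.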

Wow, we did it with ArduPilot 10 years ago :) But cool work anyway.

Cool stuff!

What happens in a steep dive, when the sky is out of view?

This is awesome

really cool - thank you for walking through the code here. awesome work

How did you perform testing of the code and the airplane in general? Did you use simulation software to model the plane and test the code?

Was flying the club's C172 one day and the turn coordinator ball was way the hell out to the side, very odd. I did everything in my power to center it, but my head wouldn't let me, so I went instrument-only for ten seconds and the ball magically centered. Eyes outside again, and as if by some black magic the damn ball headed off to the side again! What was it? A cloud on the horizon was at an angle... You will need some checks on your algorithm. I did on mine.

Very informative.❤

Interesting approach to this problem. There are some downsides to using visuals for such a vital system. Is this just meant as a proof of concept? For production, a simple IMU would probably be faster and less complex on the software side. Nevertheless, great video 👍

Is there another video in this series coming?

What kind of hardware did you use? Camera type and connections? Does this mean that you used RP Camera? Thank you.

Great video. Is there a reason or an advantage to using cameras for this instead of an IMU?

Welcome.
