Alberto Bacchelli: How code review works (and doesn't) in the real world

---

On April 27, 2022, It Will Never Work in Theory ran its first live event: lightning talks from leading software engineering researchers presenting immediate, actionable results from their work. Our audience learned:

- powerful new ways to test modern software,

- how to do better, smarter code reviews,

- what effective remote onboarding means during the pandemic,

- whether test-driven development actually makes you more productive,

- and what "productive" really means for programmers.

Their slides, and over 250 reviews of software engineering research papers, are all available on https://neverworkintheory.org.

We are grateful to Strange Loop, Mozilla, and Taylor & Francis for their support, and we hope you'll join us at Strange Loop 2022 in September for more insights.

Comments:

Excellent talk! Direct, simple, short, and informative.

Ответить

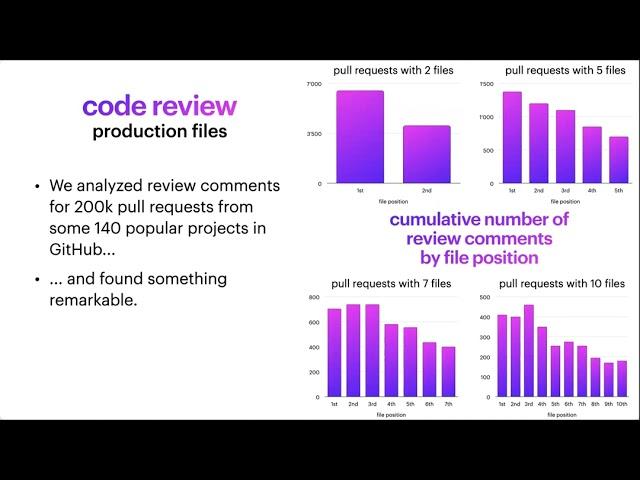

Could you please publish the stats/raw data of your research? The stuff you present seems to be a bit misleading, I think.

Which order is the "proper one" for maximizing the number of issues found overall? Should we randomize the order of the files each time?

A 175% increase: is that something like "4 people noticed the issue vs. 7 people noticed the issue, out of a pool of 40"? How confident, in terms of probability, are you in the result of your experiment? I understand that attention span is indeed involved, but I would imagine there is a "small numbers" fallacy somewhere in this research.

Regarding the tooling support, I can't quite grasp how exactly you would support such a feature (reordering the files). There is literally no source from which we could get the slightest idea of which file is important, or even the order in which the files were edited: it's all in a single commit. I imagine you could do something if you had a branch with multiple commits where each file is edited separately, which is a bit of an idiosyncrasy. For example, I use TDD when I can and other approaches when I can't, but either way I almost never commit something that leaves the project broken. So committing files separately is not an option: the changes and tests will be in the same merge request, most probably in the same commit. Also, it is quite popular to squash commits (although I'm not a fan of it). So... why and how?

Could we get sample sizes and actual likelihoods per group, rather than a single relative percentage? E.g., if you had 100 participants, and 1 person found the bug in group A and 2 people in group B, you could say the chances doubled, but that would hardly be convincing.
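To make the concern about small samples concrete, here is a minimal sketch that puts a relative increase next to a two-sided Fisher's exact test. The numbers are the hypothetical ones from the comment, not data from the talk, and the even split of the 100 participants into two groups of 50 is an added assumption. The function names are made up for illustration.

```python
from math import comb


def relative_increase(found_a, n_a, found_b, n_b):
    """Relative increase of group B's detection rate over group A's."""
    rate_a = found_a / n_a
    rate_b = found_b / n_b
    return (rate_b - rate_a) / rate_a


def fisher_exact_two_sided(found_a, n_a, found_b, n_b):
    """Two-sided Fisher's exact test p-value for a 2x2 table.

    Sums the probability of every table (with the same margins) that is
    no more likely than the observed one. Integer counts are compared,
    so there is no floating-point tie problem.
    """
    total_found = found_a + found_b

    def ways(k):
        # Number of ways group A can contain exactly k of the finders.
        return comb(n_a, k) * comb(n_b, total_found - k)

    observed = ways(found_a)
    lowest = max(0, total_found - n_b)
    highest = min(n_a, total_found)
    extreme = sum(
        ways(k) for k in range(lowest, highest + 1) if ways(k) <= observed
    )
    return extreme / comb(n_a + n_b, total_found)


# Hypothetical: 1 of 50 reviewers found the bug in group A, 2 of 50 in group B.
increase = relative_increase(1, 50, 2, 50)
p_value = fisher_exact_two_sided(1, 50, 2, 50)
print(f"relative increase: {increase:.0%}, p-value: {p_value:.2f}")
```

Under these assumed numbers the detection rate doubles (a 100% relative increase), yet the p-value is 1.0, so a split like this is fully consistent with chance. That is why per-group counts are more informative than a single percentage.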


This drives home to me that you should always build a narrative around the code, and that you need to direct the reviewer on how to read through a code submission: where to start and where to go next, which might not be the next code diff.

This is possible to some extent on GitHub: in a top-level comment, you could tell them the file order to consider. But within those files you're forced to consider comments from the top of the file down.

But how much better could it be? We should be able to change the presentation layer of the code diffs more.

And yes, the test files should likely come first, because they should demonstrate the functionality.
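As a rough illustration of what a "tests first" presentation could look like, here is a small sketch that reorders the changed file paths of a review so that test files are listed before everything else. The naming heuristic (a `test` or `tests` directory, or a `test_` prefix or `_test` suffix on the file name) and both function names are assumptions for the example, not something any review tool is known to do.

```python
from pathlib import PurePosixPath


def is_test_file(path):
    """Guess whether a path is a test file (assumed naming heuristic)."""
    p = PurePosixPath(path)
    stem = p.stem.lower()
    in_test_dir = any(part.lower() in {"test", "tests"} for part in p.parts[:-1])
    return in_test_dir or stem.startswith("test_") or stem.endswith("_test")


def order_for_review(paths):
    """Put test files first; keep the original relative order otherwise.

    sorted() is stable, and False sorts before True, so test files
    (key False) move to the front without being shuffled.
    """
    return sorted(paths, key=lambda path: not is_test_file(path))


changed = ["src/app.py", "README.md", "tests/test_app.py", "src/util_test.py"]
print(order_for_review(changed))
```

A reviewer could paste the resulting order into the top-level comment of a merge request as the suggested reading sequence.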

Really great to see more statistically derived recommendations. I would love to see more of this kind of work, especially in the format presented.

Interesting findings, but it seems to boil down to "reviewers are less attentive at the end of a review than at the start". The problem with bugs is that they can be significant regardless of which file they're in, and there's no automated way to know in advance which files are most likely to contain bugs. It seems to me like the solution is to do smaller, more frequent reviews with fewer files in each review, but there are practical limits to how small a change can be while still being meaningful.


Very interesting and reflects my personal experience. I try not to read sequentially if I can, and dart between.

One other way you can try to combat these effects, in my experience, is to do reviews commit-by-commit. That way, the changes to keep in mind are smaller, the natural storytelling effect highlights inconsistencies, and production code tends to be grouped more tightly with its test code.

Do you plan to test for this effect in the future?