Talk to Your Documents, Powered by Llama-Index

Comments:

What about the security of our data? What if it's a confidential document? Thanks for your excellent videos.

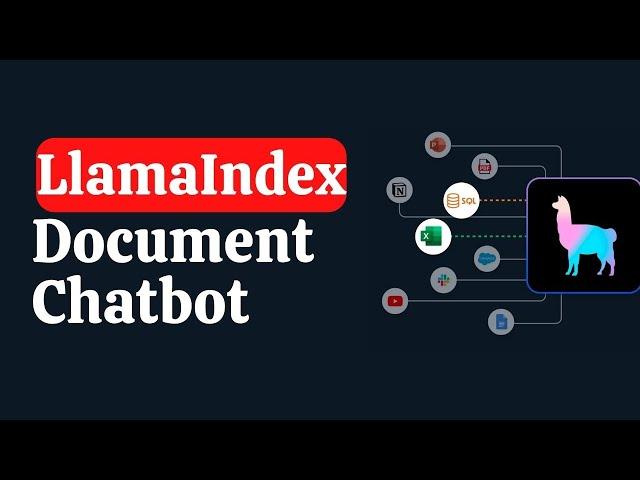

This is very helpful. Where can we find the system architecture diagram?

Awesome ❤ A little off-topic question: would you be so kind as to share the app you use for making diagrams? It's sick. I've been looking for something like that for quite a while now, but with no luck. 🥺

Thanks for the video. I'm facing serious latency issues (15+ min) while loading the stored index. How can I improve this? There are 100k+ vectors. Going with a NumPy array takes only a few minutes!

Another excellent video. Easy to follow and up to date. Thank you and keep it up!

Will you be testing the new Mistral-7B-v0.1 and Mistral-7B-Instruct-v0.1 LLMs? They claim to outperform Llama 2.😊

Excellent video. Do you know the best option for running the code behind an interface? I moved the code to VS Code and then ran it in Streamlit, but it gives me some problems. I appreciate your help.

Great video. How can I compare hundreds of documents using LlamaIndex, and will it know which chunk belongs to which document when answering the question? Also, how do you make sure all the pieces that should be in one chunk stay together? For instance, a table that spans two pages should still end up in one chunk.

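A minimal sketch of both ideas in plain Python, not LlamaIndex's own node parsers (the Chunk class, the chunk_document function, the "source" metadata key, and the sample documents are all hypothetical). Each chunk keeps the name of the document it came from, so an answer built from retrieved chunks can be traced back to its source; LlamaIndex's SimpleDirectoryReader similarly attaches file metadata such as the file name to each loaded document by default. Chunks are packed from whole blocks, so a table only stays together if the PDF extraction step already returned it as one block. That extraction is the hard part and is simply assumed here.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    metadata: dict = field(default_factory=dict)


def chunk_document(blocks, source, max_chars=500):
    """Greedily pack whole blocks (paragraphs, tables) into chunks of about max_chars.

    A block is never split: a block longer than max_chars becomes a chunk of its
    own, so a table extracted as one block stays in one chunk. Every chunk
    records which document it came from under the hypothetical "source" key.
    """
    chunks, current = [], []
    for block in blocks:
        candidate = "\n\n".join(current + [block])
        if current and len(candidate) > max_chars:
            # Adding this block would overflow the chunk: flush and start a new one.
            chunks.append(Chunk("\n\n".join(current), {"source": source}))
            current = [block]
        else:
            current.append(block)
    if current:
        chunks.append(Chunk("\n\n".join(current), {"source": source}))
    return chunks


# Hypothetical documents, already split into blocks by some extraction step.
docs = {
    "report_a.pdf": ["Intro paragraph.", "| q1 | q2 |\n| 10 | 20 |", "Closing remarks."],
    "report_b.pdf": ["Another document."],
}
all_chunks = [
    chunk
    for name, blocks in docs.items()
    for chunk in chunk_document(blocks, name, max_chars=40)
]
for chunk in all_chunks:
    print(chunk.metadata["source"], repr(chunk.text))
```

With max_chars=40, the table in report_a.pdf lands in a chunk of its own instead of being cut in half, and every chunk still reports which file it belongs to.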

Excellent videos! Really helping out with my work. Curious what tool you are using to draw the system architecture? I really like the way it renders the architectures.

Much more intuitive than LangChain

Nice intro to llama-index 👍. I think for a small number of documents the default llama-index storage, which keeps the embeddings in JSON, is sufficient. I suppose you can also use ChromaDB, Weaviate, or other vector stores. It would be nice to see it with a non-default vector store...

I liked your explanation. You are a good storyteller. You explained the details in a simple yet easy-to-implement manner. Thank you. I look forward to your next video.

But how do we ensure the latest data is fed to the LLM in real time? In this case, we need to provide the data to Llama, and the response is limited to the data provided.

If I'm using Weaviate, how do I load the data then?

What's the difference between using LlamaIndex and just using OpenAI embeddings?

I tried this in my Colab Pro account and the session crashed when I ran the vector store cell.

Out of GPU memory. Colab allocated 16GB of VRAM.

Would you please add an option for using Hugging Face-hosted LLMs through their free Inference API (available for select models)?

Thanks for a great video.

Could you do a tutorial on how to do this locally?

I'm very interested in LlamaIndex, but I'm wary of using things that aren't on my local hardware.

I learn so much from you!

Is it better to use LlamaIndex or RAG (Retrieval-Augmented Generation)?

I don't have a credit card, but I will buy you a coffee someday (maybe in person, who knows :)

Is this production-ready code? What important points should we keep in mind when building a similar app for a production environment?

How does LlamaIndex compare with the localGPT method?

Perfect pace and level of knowledge. Loved the video.

Hi, can I get the link to your pipeline drawing, please?

To be honest, this is the best tutorial I've seen in 2023.

Amazing! I haven't seen enough videos talking about persisting the index, especially in beginner-level tutorials. I think it's such a crucial concept, and I only found out about it much later. I love the flow of this, and it's perfectly explained! Liked and subbed!

Can you make a video on how to turn all of this into a website chatbot? Say we followed this video and made a chatbot to talk with our data; how do we use it on our website?

Do you have any example of running a model on a personal desktop/server? I don't wish to publish my content to ChatGPT or any internet service.

Excellent. Are you planning to make a video on multimodal RAG? I have a PDF that is an instruction manual with text and images. When a user asks a question, for example "How to connect the TV to external speakers?", it should show the steps and the images associated with those steps. Everywhere I look, I only find examples of image "generation". I don't want to generate images; I just want to show what's in the PDF based on the question.

Hi bro, cool video! May I ask if there is a way to store a quantized model with LlamaIndex? It's very painful to quantize it every single time I run it.

Hi. The Google Colab link gives an error.

Great video. The notebook fails at the first hurdle for me:

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

llmx 0.0.15a0 requires cohere, which is not installed.

tensorflow-probability 0.22.0 requires typing-extensions<4.6.0, but you have typing-extensions 4.9.0 which is incompatible.

ValueError: The current `device_map` had weights offloaded to the disk. Please provide an `offload_folder` for them. Alternatively, make sure you have `safetensors` installed if the model you are using offers the weights in this format.

Getting this error

Do you have code to do the same without OpenAI, using some model from Hugging Face?

I am at the VectorStoreIndex.from_documents cell. It has been running for about 24 hours now. How do I know when it will end? I am running it locally on my laptop. The output shows completed batches, 2400+ so far, but it doesn't show how many are left. Can somebody help? My data consists of 850+ JSON files, about 70 MB overall.

I'm doing the same, but indexing every node created. There are around 5000 nodes, and it's taking a long time. Is there some progress bar (like tqdm) code I can add to see how long the indexing process will take?

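On the progress question: recent LlamaIndex versions accept show_progress=True in VectorStoreIndex.from_documents, which prints tqdm progress bars; check that your installed version supports it. The ETA arithmetic itself is simple enough to sketch in plain Python, assuming you know the node count and the batch size: the total number of batches is ceil(nodes / batch_size), and the remaining time is roughly the average time per finished batch multiplied by the batches left. embed_batch below is a hypothetical stand-in for the real embedding call, and the batch size of 10 is only an example, not necessarily what LlamaIndex uses in your setup.

```python
import math
import time


def embed_batch(texts):
    """Hypothetical stand-in for a real embedding call (e.g. an API request)."""
    time.sleep(0.01)  # simulate request latency
    return [[float(len(t))] for t in texts]


def embed_with_progress(texts, batch_size=10):
    """Embed texts batch by batch, printing done/total batches and an ETA."""
    total_batches = math.ceil(len(texts) / batch_size)
    start = time.monotonic()
    vectors = []
    for done, i in enumerate(range(0, len(texts), batch_size), start=1):
        vectors.extend(embed_batch(texts[i:i + batch_size]))
        elapsed = time.monotonic() - start
        # Average time per finished batch times the number of batches still to go.
        eta = elapsed / done * (total_batches - done)
        print(f"batch {done}/{total_batches}, elapsed {elapsed:.1f}s, ETA {eta:.1f}s")
    return vectors


nodes = [f"node {n}" for n in range(45)]
vectors = embed_with_progress(nodes, batch_size=10)
```

At 10 nodes per batch, the roughly 5000 nodes mentioned above would be 500 batches, so the counter has a fixed denominator instead of an open-ended count.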

Hello, please help with this. When I execute the line 'index = VectorStoreIndex.from_documents(documents)', after 1 min I get error 429 (insufficient_quota). I checked whether the OPENAI_API_KEY variable was registered with '!export -p', and it is. Thanks.

Can I build a multilingual chatbot using llama index?

Thanks for the clear explanation. Could you please share the name of the tool you used to create the workflow diagram?

Great explanation

Finally a good tutorial on the subject! Thanks so much!

Hi, you mentioned in this video that this is the system prompt for StableLM. Is there a way I can find the prompt format for other LLMs, for example Mixtral 8x7B/22B or Llama 3?

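Prompt formats are model-specific and are documented on each model's card. With Hugging Face transformers, tokenizer.apply_chat_template(messages, tokenize=False) renders a conversation in the model's own template, which is the safest route. The sketch below hardcodes three layouts as I understand them (Llama 3 Instruct, Mixtral 8x7B Instruct, and StableLM-Tuned-Alpha), so verify them against the model cards before relying on them; the function names and the PROMPT_FORMATS table are hypothetical.

```python
def llama3_prompt(system, user):
    """Llama 3 Instruct layout: a header per role, <|eot_id|> closes each turn."""
    return (
        "<|begin_of_text|>"
        f"<|start_header_id|>system<|end_header_id|>\n\n{system}<|eot_id|>"
        f"<|start_header_id|>user<|end_header_id|>\n\n{user}<|eot_id|>"
        # Leave the assistant header open so the model writes the reply.
        "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )


def mixtral_instruct_prompt(system, user):
    """Mixtral 8x7B Instruct layout. It has no system role, so this sketch
    prepends the system text to the user turn (a common workaround)."""
    return f"<s>[INST] {system}\n\n{user} [/INST]"


def stablelm_alpha_prompt(system, user):
    """StableLM-Tuned-Alpha layout: role tokens with no separators."""
    return f"<|SYSTEM|>{system}<|USER|>{user}<|ASSISTANT|>"


PROMPT_FORMATS = {
    "llama3": llama3_prompt,
    "mixtral": mixtral_instruct_prompt,
    "stablelm": stablelm_alpha_prompt,
}

for name, build in PROMPT_FORMATS.items():
    print(name, repr(build("Answer from the documents only.", "What is RAG?")))
```

If your tokenizer already adds the beginning-of-sequence token (<|begin_of_text|> or <s>) on its own, drop it from the string to avoid a duplicate.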

Clear and concise explanations

Nice video! Dependency conflicts are legion with both LangChain and LlamaIndex. They have rushed tools to production, but software quality has been sacrificed for speed. That trend is here to stay: we spend more time resolving dependency conflicts than coding our apps. Your videos are very good.

Can you show how to do it with free, local LLMs?

Hello! Nice intro and diagram, quite an OK intro to the concepts. Unfortunately, a number of things are already deprecated, obsolete, or changed in the API in a breaking way, so there's no chance of using the Colab without digging in or looking at the current API :(
