PySpark AWS Glue ETL Job to Transform and Load data from Amazon S3 Bucket to DynamoDB | Spark ETL

===================================================================

1. SUBSCRIBE FOR MORE LEARNING :

https://www.youtube.com/channel/UCv9MUffHWyo2GgLIDLVu0KQ

===================================================================

2. CLOUD QUICK LABS - CHANNEL MEMBERSHIP FOR MORE BENEFITS :

https://www.youtube.com/channel/UCv9MUffHWyo2GgLIDLVu0KQ/join

===================================================================

3. BUY ME A COFFEE AS A TOKEN OF APPRECIATION :

https://www.buymeacoffee.com/cloudquicklabs

===================================================================

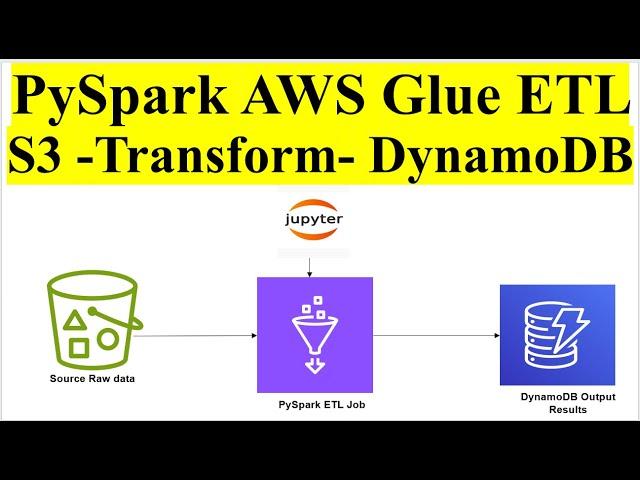

In this tutorial, we build an ETL (Extract, Transform, Load) pipeline with PySpark in the AWS Glue environment. It is aimed at data engineers, data scientists, and anyone who wants to use AWS services for data processing and management.

The video begins by outlining the pipeline architecture and its key components: Amazon S3 for storage, AWS Glue for data transformation, and DynamoDB as the target database.

The presenter then walks through the setup step by step: configuring the AWS services, setting up permissions, and creating the necessary resources such as the S3 bucket and the DynamoDB table.
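As a rough sketch of the setup step, the snippet below builds an IAM policy for the Glue job role and the arguments for creating the DynamoDB table. The bucket, table, region, and account ID are hypothetical placeholders, the `id` partition key and on-demand billing are assumptions, and the exact DynamoDB actions the Glue writer needs are also an assumption (an AccessDenied error in the job logs will show if one is missing). The role also needs the usual Glue service permissions, for example the AWS-managed `AWSGlueServiceRole` policy.

```python
import json

# Hypothetical placeholders: replace with your own bucket, table, region and account.
BUCKET = "my-etl-source-bucket"
TABLE = "my-etl-target-table"
REGION = "us-east-1"
ACCOUNT_ID = "123456789012"


def glue_job_policy(bucket: str, table: str, region: str, account_id: str) -> dict:
    """IAM policy scoped to reading one S3 bucket and writing one DynamoDB table."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListSourceBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                "Sid": "ReadSourceObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
            {
                # Assumed set of actions for the Glue DynamoDB writer.
                "Sid": "WriteTargetTable",
                "Effect": "Allow",
                "Action": ["dynamodb:DescribeTable", "dynamodb:BatchWriteItem", "dynamodb:PutItem"],
                "Resource": [f"arn:aws:dynamodb:{region}:{account_id}:table/{table}"],
            },
        ],
    }


def dynamodb_table_spec(table: str, key: str = "id") -> dict:
    """Keyword arguments for boto3's DynamoDB client create_table (on-demand capacity)."""
    return {
        "TableName": table,
        "KeySchema": [{"AttributeName": key, "KeyType": "HASH"}],
        "AttributeDefinitions": [{"AttributeName": key, "AttributeType": "S"}],
        "BillingMode": "PAY_PER_REQUEST",
    }


if __name__ == "__main__":
    print(json.dumps(glue_job_policy(BUCKET, TABLE, REGION, ACCOUNT_ID), indent=2))
    # With boto3 installed and credentials configured, the table could be created with:
    # boto3.client("dynamodb").create_table(**dynamodb_table_spec(TABLE))
```

Keeping the policy and table spec as plain dictionaries makes them easy to review before pasting them into the console or passing them to boto3.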

Next, the tutorial turns to its core: writing PySpark scripts for the transformation. The presenter shows how to use the PySpark DataFrame API to read data from S3, apply transformations such as filtering, aggregation, and data cleansing, and prepare the result for loading into DynamoDB.
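A minimal sketch of those three kinds of transformation, assuming a hypothetical orders dataset with `order_id`, `customer_id`, and `amount` columns (not taken from the video). `transform_df` uses the PySpark DataFrame API and only imports `pyspark` when it is called, so it is meant to run inside the Glue job; `transform_rows` applies the same steps to plain dictionaries so the logic can be checked without a Spark cluster.

```python
from collections import defaultdict


def transform_df(df):
    """PySpark version for the Glue job; pyspark is imported only when this runs."""
    from pyspark.sql import functions as F

    return (
        df.dropna(subset=["order_id", "customer_id"])          # cleansing: drop rows missing keys
        .withColumn("amount", F.col("amount").cast("double"))   # cleansing: normalize the type
        .filter(F.col("amount") > 0)                            # filtering
        .groupBy("customer_id")                                 # aggregation per customer
        .agg(
            F.count("order_id").alias("order_count"),
            F.round(F.sum("amount"), 2).alias("total_amount"),
        )
    )


def transform_rows(rows):
    """Plain-Python sketch of the same steps on a list of dicts."""
    totals = defaultdict(lambda: [0, 0.0])  # customer_id -> [order_count, total_amount]
    for row in rows:
        if row.get("order_id") is None or row.get("customer_id") is None:
            continue
        try:
            amount = float(row.get("amount"))
        except (TypeError, ValueError):
            # With ANSI mode off (the Spark 3 default), Spark's cast yields null here,
            # and the filter then drops the row.
            continue
        if amount <= 0:
            continue
        totals[row["customer_id"]][0] += 1
        totals[row["customer_id"]][1] += amount
    return {
        cid: {"order_count": count, "total_amount": round(total, 2)}
        for cid, (count, total) in totals.items()
    }
```

The aggregated output has one row per `customer_id`, which maps naturally onto a DynamoDB table keyed by that column.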

Throughout, the tutorial points out best practices and optimizations that keep the ETL process scalable, efficient, and cost-effective. Topics such as partitioning, data type optimization, and parallel processing are covered in detail.
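To illustrate partitioning, here is a sketch that assumes the source data in S3 is Parquet laid out in Hive-style `year=/month=/day=` folders (an assumption; the video's layout may differ). Building the list of day prefixes up front means Spark only lists and reads the partitions the job needs, and the optional `repartition` controls how many parallel tasks handle the downstream work.

```python
from datetime import date, timedelta


def partition_paths(bucket: str, prefix: str, start: date, end: date) -> list:
    """Return one S3 prefix per day in [start, end] (inclusive)."""
    if end < start:
        raise ValueError("end must not be before start")
    paths = []
    day = start
    while day <= end:
        paths.append(
            f"s3://{bucket}/{prefix}/year={day.year:04d}/month={day.month:02d}/day={day.day:02d}/"
        )
        day += timedelta(days=1)
    return paths


def read_partitions(spark, bucket, prefix, paths, num_partitions=None):
    """Read only the selected partitions with PySpark (runs inside Spark/Glue)."""
    # basePath makes Spark keep year/month/day as columns when given leaf directories.
    df = spark.read.option("basePath", f"s3://{bucket}/{prefix}/").parquet(*paths)
    if num_partitions:
        # More partitions means more parallel tasks for the transform and the write.
        df = df.repartition(num_partitions)
    return df
```

For example, `partition_paths("my-etl-source-bucket", "raw/orders", date(2024, 2, 28), date(2024, 3, 1))` returns three prefixes (Feb 28, Feb 29, Mar 1); the bucket and prefix names here are hypothetical.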

Once the transformation is complete, the video moves to the final stage of the pipeline: loading the transformed data into DynamoDB. Viewers see how to use AWS Glue DynamicFrames to write the data directly to a DynamoDB table, where it can be stored and queried at scale.
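A sketch of the load step, assuming the transformed data is a Spark DataFrame and the target table already exists. `DynamicFrame.fromDF` and `write_dynamic_frame_from_options` with `connection_type="dynamodb"` are part of the Glue library; the table name and the 0.5 write-throughput fraction are illustrative values, not the video's settings. Keeping the options in a separate helper makes them testable outside Glue.

```python
def dynamodb_sink_options(table_name: str, write_percent: float = 0.5) -> dict:
    """connection_options for a Glue DynamoDB sink (Glue's documented examples use strings)."""
    if not table_name:
        raise ValueError("table_name is required")
    if write_percent <= 0:
        raise ValueError("write_percent must be positive")
    return {
        "dynamodb.output.tableName": table_name,
        # Fraction of the table's write capacity the job may consume.
        "dynamodb.throughput.write.percent": str(write_percent),
    }


def write_to_dynamodb(glue_context, df, table_name, write_percent=0.5):
    """Convert a Spark DataFrame to a DynamicFrame and write it to DynamoDB (Glue runtime only)."""
    from awsglue.dynamicframe import DynamicFrame

    dyf = DynamicFrame.fromDF(df, glue_context, "to_dynamodb")
    glue_context.write_dynamic_frame_from_options(
        frame=dyf,
        connection_type="dynamodb",
        connection_options=dynamodb_sink_options(table_name, write_percent),
    )
```

Raising `write_percent` speeds up the load at the cost of competing with other writers on the same table.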

The tutorial concludes with an overview of the entire pipeline, summarizing the key steps and the main considerations for monitoring, troubleshooting, and optimizing it for real-world use cases.
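For troubleshooting, a cheap pre-load check can catch items that DynamoDB would reject or silently overwrite. This sketch assumes a hypothetical `id` partition key. It flags missing or empty keys and duplicate keys (a later write with the same key replaces the earlier item), and it approximates item size with JSON length against DynamoDB's 400 KB item limit. DynamoDB's own size accounting differs, so treat the size check as a rough guard.

```python
import json

MAX_ITEM_BYTES = 400 * 1024  # DynamoDB's maximum item size (400 KB)


def find_bad_items(items, key="id"):
    """Return (index, reason) pairs for items worth investigating before the write."""
    problems = []
    seen = set()
    for i, item in enumerate(items):
        value = item.get(key)
        if value is None or value == "":
            # Key attributes cannot be missing or empty strings.
            problems.append((i, "missing key"))
            continue
        if value in seen:
            # Not an error by itself, but the earlier item would be overwritten.
            problems.append((i, "duplicate key"))
        seen.add(value)
        # JSON length only approximates DynamoDB's item-size calculation.
        size = len(json.dumps(item, default=str).encode("utf-8"))
        if size > MAX_ITEM_BYTES:
            problems.append((i, "item too large"))
    return problems
```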

By the end, viewers should understand how to combine PySpark, AWS Glue, and DynamoDB into robust, scalable ETL pipelines for data stored in Amazon S3, whether they are seasoned data engineers or just starting out with AWS data services.

Repo link: https://github.com/RekhuGopal/PythonHacks/tree/main/AWS_ETL_Job_In_Notebook

#pyspark #aws #glue #etl #datalake #dataengineering #awscloud #s3 #dynamodb #bigdata #dataanalytics #dataprocessing #datatransformation #awsarchitecture #cloudcomputing #awsdeveloper #pythonprogramming #awsdata #awsdatalake #awsdynamodb #awsglue #awsanalytics #awsbigdata #dataloading #dataintegration #awsdataengineering #cloudetl #s3storage #cloudquicklabs
