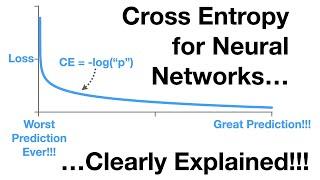

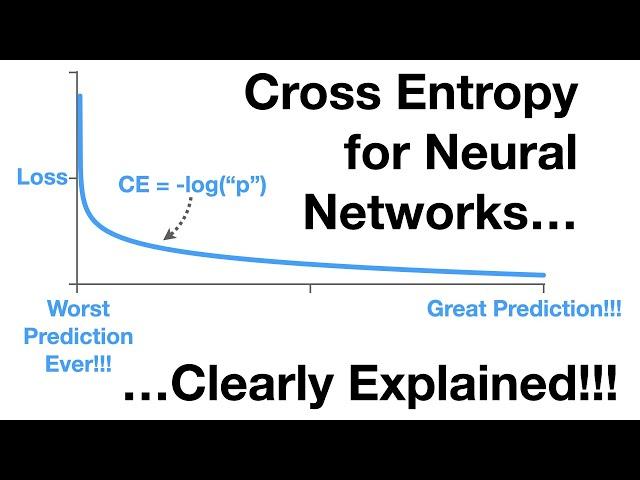

Neural Networks Part 6: Cross Entropy

Comments:

you rock josh!!

Thank you, this makes sense now.

Great explanation in an entertaining way. Bam!

As you said in this video, if the cross-entropy function helps gradient descent more than the sum of squared residuals does, why don't we also use cross-entropy to optimize linear models such as linear regression? Why do we use SSR there and not cross-entropy? Thank you for the wonderful videos that help us understand the math and functions.

I'm in a stats PhD program and we had a guest speaker last week. During lunch the speaker asked us which course we liked most at our school, and one of my classmates said that, actually, he likes the StatQuest "course" the most. And I was nodding my head 100 times per minute. We discussed why US universities hire professors who are good at research rather than professors who are good at teaching, and why there are no tenure-track teaching positions... The US education system really needs to change.

I am currently starting my bachelor's thesis on particle physics and I was told that a big part of it consists of running a neural network with PyTorch. Your videos are really, really useful, and thanks to you I at least have a vague idea of how a NN works. Looking forward to watching the rest of your Neural Networks videos!! TRIPLE BAM!!

I would not be able to get how neural networks fundamentally work without this series. Thank you so much Josh! Amazing and clear explanations!

very very intuitive and very great explanation

What is this guy made of??? what does he eat??? Are you a God?? An alien?? You are so smart and dope man!!! How do you do all this? He should be a lecturer at MIT! SO underrated content💞💞💞💞💞💞

bam

Why is the observed probability 1 in the case of setosa? It's still kind of confusing.

Still trying to wrap my head around how this is related to entropy. If entropy is the expected surprise, it's like we are using one distribution for the surprise and a different one for the expected value.
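
A minimal sketch of the distinction this comment is circling, assuming natural logs and a one-hot observed distribution. The function names and the predicted values are hypothetical, chosen only for illustration. Entropy measures surprise and takes the expected value with the same distribution; cross entropy measures surprise with the predicted distribution but takes the expected value with the observed one.

```python
import math

def entropy(p):
    # Expected surprise when events follow p and surprise is also measured with p.
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    # Expected surprise when events follow p but surprise is measured with q.
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical example: the flower is known to be setosa, so the observed
# distribution puts probability 1 on setosa and 0 on the other two species.
observed = [1.0, 0.0, 0.0]
predicted = [0.57, 0.20, 0.23]  # made-up SoftMax output

# With a one-hot observed distribution, only the setosa term survives,
# so the cross entropy reduces to -log(predicted probability for setosa).
loss = cross_entropy(observed, predicted)
print(loss)
```

When the two distributions are identical, `cross_entropy(p, p)` equals `entropy(p)`, which is one way to see that cross entropy generalizes entropy rather than replacing it.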

I just can’t believe how you opened my eyes. How can you be so awesome 👌👌. Sharing this knowledge for free is amazing.

Thank you for this video! It and others helped me pass my exam! :D

thank you so much for your explanation!

Hey Josh, I saw one of your videos about entropy in general - which is a way to measure uncertainty or surprise. Regarding Cross Entropy, the idea is the same - but now it's for the SoftMax outputs for the predicted Neural Network values?
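
As a rough illustration of the pipeline this question describes, here is a sketch that turns raw network outputs into SoftMax probabilities and then computes cross entropy for the observed class. The raw output values and the helper names are assumptions made up for the example, not values from the video.

```python
import math

def softmax(raw):
    # Subtract the max before exponentiating for numerical stability;
    # this does not change the resulting probabilities.
    m = max(raw)
    exps = [math.exp(r - m) for r in raw]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy_loss(raw, observed_index):
    # With a one-hot observed label, cross entropy is just the negative log
    # of the SoftMax probability assigned to the observed class.
    return -math.log(softmax(raw)[observed_index])

# Hypothetical raw outputs for (setosa, versicolor, virginica).
raw_outputs = [1.43, -0.40, 0.23]
probs = softmax(raw_outputs)
loss = cross_entropy_loss(raw_outputs, observed_index=0)

print(probs)  # three values between 0 and 1 that sum to 1
print(loss)   # smaller when the observed class gets a higher probability
```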

💚

Wow, I feel that saying thank you is nothing compared with what you do! Very impressive ❤❤

Really good explanation. The difference between squared error and cross entropy is very well explained.

Are ArgMax and SoftMax used only for classification, or can they also be used for regression?

And the same question for cross entropy: is it used only for classification?

Thank you

Just wow, thumbs up, great explanation sir

🎉

Ok but why do we need to calculate cross-entropy?

Your video makes my mind triple BAM!!

Why not say that SSR is better suited to regression while cross entropy fits classification better?

Awesome video though!

What an amazing video. Never found any content or video better than this one anywhere on this topic. Thank you so much.

It's amazing !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

Gold!

Thank you so much! Your videos are always very clear and easy to follow :)

Hey Josh!

the way you teach is incredible. THANKS A LOT!❤

I had assumed that I would need to watch several videos to grasp this concept. OMG!! You have explained it so intuitively. Thanks a lot for saving my time and energy.

😍😍😍😍😍😍

Thank you. Another complicated topic made simple. !!!!

This is an amazing video

But in a regression problem we still use SSR, right?

So what would happen if we still used SSR in a classification problem and, after backpropagation finishes its work, checked for the maximum output? Is the issue that if we have an output of 1.64 and the observed value is 1, SSR also tries to shrink that distance, so we needed a function that controls the minimum and maximum values (in our case 0 and 1)?
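
One way to explore this question numerically is the sketch below. It assumes the observed value is 1 and that the prediction `p` is already a SoftMax probability between 0 and 1. It compares a single squared residual with cross entropy, along with the slope of each with respect to `p`. The function names are hypothetical.

```python
import math

def squared_residual(p):
    # Squared difference between the observed value (1) and the prediction p.
    return (1.0 - p) ** 2

def squared_residual_slope(p):
    # Derivative of (1 - p)^2 with respect to p.
    return -2.0 * (1.0 - p)

def cross_entropy(p):
    # Cross entropy for the observed class when its predicted probability is p.
    return -math.log(p)

def cross_entropy_slope(p):
    # Derivative of -log(p) with respect to p.
    return -1.0 / p

for p in (0.01, 0.1, 0.5, 0.9):
    print(p, squared_residual_slope(p), cross_entropy_slope(p))
```

For predictions between 0 and 1, the squared residual's slope never exceeds 2 in magnitude, while the cross entropy slope grows without bound as `p` approaches 0. So a very bad prediction produces a much larger step under cross entropy.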

Really well explained! Thanks Josh :)

The hero we wanted, and the hero we needed, StatQuest...

My friend! I'm on Part 6. How can I learn the differences between LSTM, Bi-LSTM, and Recursive Neural Networks?

I love this way of learning!

amazing work, can't wait to start on the book once I finish all your videos

Hello Josh!

I have to say WOW!! I love every single one of your videos!! They are so educational. I recently started studying ML for my master's degree, and from the moment I found your channel ALL the questions I wonder about get answered! Also, I noticed that you reply to every post in the comment section. I am astonished... no words. A true professor!

Thanks for everything! Thank you for being a wonderful teacher.

Love each and every video by StatQuest. Thank you Josh and team for providing such clear, easy-to-digest concepts with a bonus of fun and entertainment. Quadruple BAM!!!

Kudos!!!! 🙌🏻 BAM!!!!!

This is great! Thank you!!!
