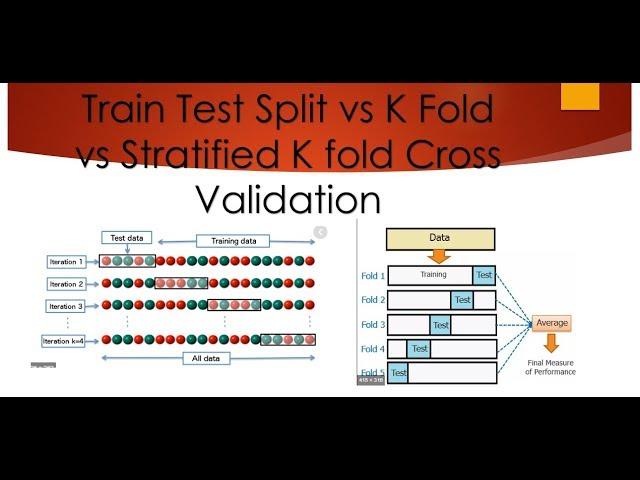

Train Test Split vs K Fold vs Stratified K fold Cross Validation

Comments:

It would be best if you had provided a link to the dataset for practice and confirmation.

Thanks.

Hello, very nice video, but one question: what is the train/test ratio in each iteration when you use stratified k-fold cross-validation? I mean, is it somehow combining stratified k-fold cross-validation with a train/test split?
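
As a rough illustration of the ratio question above: with `n_splits=k`, each iteration holds out about 1/k of the rows for testing and trains on the rest, so `n_splits=5` behaves like an 80/20 split repeated five times. The sketch below uses a synthetic dataset from `make_classification` (an assumption, since the video's dataset is not linked here).

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold

# Synthetic, imbalanced stand-in data (not the dataset from the video).
X, y = make_classification(n_samples=1000, weights=[0.8, 0.2], random_state=0)

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

fold_sizes = []
for train_idx, test_idx in skf.split(X, y):
    # Each fold: roughly 4/5 of rows for training, 1/5 for testing,
    # with the positive-class share kept close to the overall share.
    fold_sizes.append((len(train_idx), len(test_idx)))
    print(len(train_idx), len(test_idx), round(y[test_idx].mean(), 3))
```

Changing `n_splits` changes the ratio: 10 folds gives roughly 90/10, 4 folds gives 75/25.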

This video could have been better.

You are a saviour!

We are living in a wonderful universe

Krish, in k-fold validation you fitted the classifier on different sets of X train and Y train and got different accuracies. This is fine for evaluating the model, but you didn't mention which data we should train the model on if we evaluate performance using k-fold. Are we going to train our classifier on the full data, i.e. X and Y? Should the final model that we want to use later be trained on the full dataset?
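
One common workflow for the question above, sketched under assumptions: use cross-validation only to estimate performance, then refit the same estimator on all of X and y for later use. The `RandomForestClassifier` and the synthetic data are placeholders, not necessarily what the video uses.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

# Placeholder data and model.
X, y = make_classification(n_samples=500, random_state=0)
clf = RandomForestClassifier(n_estimators=50, random_state=0)

# Step 1: estimate performance. cross_val_score fits clones of clf,
# so clf itself is still unfitted after this call.
scores = cross_val_score(clf, X, y, cv=5)
print(scores.mean(), scores.std())

# Step 2: train the model you will actually use on the full dataset.
final_model = clf.fit(X, y)
```

The cross-validated mean is then read as an estimate of how `final_model` is likely to perform on unseen data.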

Hello Krish sir... What if I need to perform a custom prediction? I need to call classifier.predict(test), but my code reports that the feature names are missing. I'm using the Pima Diabetes dataset from Kaggle.

How about precision, recall, and F-measure?
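
For precision, recall, and F1 per fold, one option is `cross_validate` with several scorers. This is a sketch on synthetic data with a placeholder `LogisticRegression`, not the model from the video.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_validate

# Placeholder data and model.
X, y = make_classification(n_samples=500, random_state=0)
clf = LogisticRegression(max_iter=1000)

# For a classifier with an integer cv, the folds are stratified.
results = cross_validate(clf, X, y, cv=5, scoring=["precision", "recall", "f1"])

for name in ("test_precision", "test_recall", "test_f1"):
    print(name, results[name].mean())
```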

I love your content, it is very helpful. You are a treasure. But this video would have been loads better if you slowly allowed students to copy over the code.

Sir, please upload the GitHub link as well.

Is the notebook for this video available on GitHub, @krish?

so clear explanation

thanks

How can I get the ROC curve and confusion matrix in this project for each of train/test split, k-fold, and stratified k-fold cross-validation? Please give us a video on this.

Y.iloc[number] is not working. Error is

AttributeError: 'numpy.ndarray' object has no attribute 'iloc'
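
The error above means `Y` is a NumPy array rather than a pandas Series; `.iloc` exists only on pandas objects. A short sketch of both options (the index array stands in for the indices returned by a splitter):

```python
import numpy as np
import pandas as pd

y_array = np.array([0, 1, 0, 1, 0, 1, 0, 1])
idx = np.array([0, 2, 5])  # stand-in for fold indices from skf.split(...)

# NumPy arrays are indexed directly with the index array.
from_array = y_array[idx]

# If you prefer .iloc, wrap the array in a pandas Series first.
y_series = pd.Series(y_array)
from_series = y_series.iloc[idx]

print(from_array, from_series.to_numpy())
```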

great video, thank you!

I've been wondering about this topic for a while, very happy to find your content!!!

Thanks for the very clear explanation, Krish. Can you please share the GitHub link as well?

Higher bias does not necessarily mean good accuracy; the best case is low variance and low bias.

With stratified k-fold, the only difference is that the Yes and No classes are also considered when choosing the test set, and the rest is the same as k-fold cross-validation. Is that so?

By setting n_splits to 4, I got the highest accuracy in the 4th, i.e. the last, fold. Any idea how to extract the exact data fed to train and test so that I can replicate the output of the 4th split?
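
One way to recover a specific split, sketched with `StratifiedKFold` on synthetic data (the comment does not say which splitter was used): materialize the splits as a list and pick the one you want by position. Fixing `random_state` when `shuffle=True` keeps the indices reproducible.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold

# Placeholder data.
X, y = make_classification(n_samples=400, random_state=0)

skf = StratifiedKFold(n_splits=4, shuffle=True, random_state=42)
splits = list(skf.split(X, y))

# The 4th split is at position 3 (zero-based).
train_idx, test_idx = splits[3]
X_train, X_test = X[train_idx], X[test_idx]
y_train, y_test = y[train_idx], y[test_idx]
print(X_train.shape, X_test.shape)
```

Saving `train_idx` and `test_idx` (for example with `numpy.save`) lets you rebuild exactly the same train and test sets later.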

How do I get the confusion matrix and the AUC-ROC curve after k-fold validation?
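
A possible approach, not taken from the video: `cross_val_predict` returns one out-of-fold prediction per row, which can then be passed to the usual metric functions. The model and data below are placeholders.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import confusion_matrix, roc_auc_score, roc_curve
from sklearn.model_selection import StratifiedKFold, cross_val_predict

# Placeholder data and model.
X, y = make_classification(n_samples=500, random_state=0)
clf = LogisticRegression(max_iter=1000)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# Every row is predicted by a model that did not see it during training.
y_pred = cross_val_predict(clf, X, y, cv=cv)
y_proba = cross_val_predict(clf, X, y, cv=cv, method="predict_proba")[:, 1]

cm = confusion_matrix(y, y_pred)
auc = roc_auc_score(y, y_proba)
fpr, tpr, thresholds = roc_curve(y, y_proba)  # points for plotting the curve
print(cm)
print(auc)
```

Note that metrics computed on pooled out-of-fold predictions can differ slightly from the average of per-fold metrics.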

How do I get a confusion matrix after cross-validation?

Where can I get the dataset? 🙏

May I have your email ID, sir?

Hello sir can you share your Jupyter notebook, please.

cross value is coming what does it mean

Thank you for teaching. Can I get a link to the notebook?

Wow, this is very enlightening!!!! Thank you, sir! One question though: what if we need the confusion matrix? I am using Repeated Stratified K-Fold, and I'm curious how to obtain a reasonable and easy-to-compute confusion matrix. Any suggestions?
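
With `RepeatedStratifiedKFold` each row is tested more than once, so `cross_val_predict` does not apply (it expects each row in exactly one test fold). One simple alternative, sketched here with a placeholder model and synthetic data, is to add up the per-fold confusion matrices:

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import RepeatedStratifiedKFold

# Placeholder data and model.
X, y = make_classification(n_samples=300, random_state=0)
clf = LogisticRegression(max_iter=1000)

rskf = RepeatedStratifiedKFold(n_splits=5, n_repeats=3, random_state=0)

total_cm = np.zeros((2, 2), dtype=int)
for train_idx, test_idx in rskf.split(X, y):
    model = clone(clf).fit(X[train_idx], y[train_idx])
    # labels=[0, 1] keeps the matrix shape fixed for every fold.
    total_cm += confusion_matrix(y[test_idx], model.predict(X[test_idx]), labels=[0, 1])

print(total_cm)
```

Dividing `total_cm` by `n_repeats` gives an average matrix on the scale of a single pass over the data.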

Isn't stratified validation included by default in the cross_val_score function?

Hi Krish,

When we use cross_val_score and pass an int to the cv parameter to set the number of folds, and the model is a classifier, it uses stratified k-fold by default rather than plain k-fold cross-validation, right? I found this in the sklearn documentation:

cv: int, cross-validation generator or an iterable, default=None

Determines the cross-validation splitting strategy. Possible inputs for cv are:

1) None, to use the default 5-fold cross validation,

2) int, to specify the number of folds in a (Stratified)KFold,

3) CV splitter,

4) An iterable yielding (train, test) splits as arrays of indices.

For int/None inputs, if the estimator is a classifier and y is either binary or multiclass, StratifiedKFold is used. In all other cases, KFold is used.

This is just a query. Please let me know if my understanding is wrong.
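
The quoted documentation can be checked directly with `check_cv`, the helper in `sklearn.model_selection` that resolves a `cv` argument into a splitter. A small sketch with toy targets:

```python
import numpy as np
from sklearn.model_selection import check_cv

y_class = np.array([0, 1] * 10)    # binary target
y_cont = np.linspace(0.0, 1.0, 20)  # continuous target

# Integer cv + classifier + binary/multiclass y -> StratifiedKFold.
cv_for_classifier = check_cv(5, y_class, classifier=True)

# Otherwise -> plain KFold.
cv_for_regressor = check_cv(5, y_cont, classifier=False)

print(type(cv_for_classifier).__name__, type(cv_for_regressor).__name__)
```

So the understanding in the comment matches the documented behavior: for a classifier, `cv=5` is stratified without shuffling.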

Thank you! Keep going with other vids tutorial!!

If I have 1000 rows in a dataset, how can I select the first 200 rows for testing and the last 800 rows for training instead of splitting randomly?
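
For a fixed, non-random split like this, plain slicing is enough. A minimal sketch with stand-in arrays:

```python
import numpy as np

# Stand-in data: 1000 rows, 2 features.
X = np.arange(2000).reshape(1000, 2)
y = np.arange(1000) % 2

# First 200 rows for testing, remaining 800 rows for training.
X_test, y_test = X[:200], y[:200]
X_train, y_train = X[200:], y[200:]

print(X_train.shape, X_test.shape)  # (800, 2) (200, 2)
```

For a pandas DataFrame the equivalent is `df.iloc[:200]` and `df.iloc[200:]`. `train_test_split(..., shuffle=False)` also avoids randomness, but it puts the training rows first and the test rows last, which is the opposite order.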

Hello Krish

I have subscribed to your 799 plan, please let me know how i can add myself to your whatsapp group

Sir your content is great, thanks for uploading such important and informational videos. These videos are very helpful. Keep making these, more power to you. <3

So my understanding is that with cross-validation we can see what score is achievable; however, it lacks interpretability, such as the ability to make a confusion matrix out of it.

thanks man

Good, sir, I like your videos very much. I have a question: in k-fold validation, after getting the score values, how can I make a confusion matrix?

Namaskar Krish Ji! Great video, well done. My question is about imbalanced datasets and StratifiedKFold validation. Taking your churn example, let's say the churn rate is 1%, which means that out of 50k observations, 500 are churners. Because the data is highly imbalanced with very rare events, suppose you want to do some balancing (over-sampling, under-sampling, or both) and then run StratifiedKFold validation. How would StratifiedKFold work in this case? Will it take the test data (say 10%) without balancing and build the model on the balanced data (90%), so that we know validation is done on real data? Or is validation also done on balanced data? If the latter, we would need a separate test dataset to see how the model fits the real, unbalanced data, wouldn't we? I hope that's clear. Thanks, Sachin
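
`StratifiedKFold` itself only produces indices; whether the test folds stay unbalanced depends on where the resampling happens. A sketch of the usual recommendation, under assumptions (synthetic 1% data, naive random over-sampling written by hand, placeholder `LogisticRegression`): split first, then balance only the training part of each fold, so every test fold keeps the real class ratio.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import recall_score
from sklearn.model_selection import StratifiedKFold

# Synthetic stand-in with roughly 1% positives.
X, y = make_classification(n_samples=5000, weights=[0.99, 0.01], flip_y=0, random_state=0)

rng = np.random.default_rng(0)
skf = StratifiedKFold(n_splits=10, shuffle=True, random_state=0)

test_rates, recalls = [], []
for train_idx, test_idx in skf.split(X, y):
    X_tr, y_tr = X[train_idx], y[train_idx]

    # Naive over-sampling: repeat minority rows until classes are equal.
    minority = np.flatnonzero(y_tr == 1)
    majority = np.flatnonzero(y_tr == 0)
    extra = rng.choice(minority, size=len(majority) - len(minority), replace=True)
    keep = np.concatenate([majority, minority, extra])

    model = LogisticRegression(max_iter=1000).fit(X_tr[keep], y_tr[keep])

    # The test fold is untouched, so it reflects the original imbalance.
    test_rates.append(y[test_idx].mean())
    recalls.append(recall_score(y[test_idx], model.predict(X[test_idx])))

print(np.round(test_rates, 3))
print(np.mean(recalls))
```

Balancing the whole dataset before splitting would instead put resampled rows in the test folds, and with over-sampling it can leak copies of the same row into both train and test.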

How different is this from setting the stratify parameter (stratify=y) when splitting the data with train_test_split?
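
Roughly, `stratify=y` in `train_test_split` produces one stratified hold-out split, while `StratifiedKFold` produces k of them so that every row is tested once. A sketch of the single-split version on synthetic data:

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split

# Synthetic stand-in with about 10% positives.
X, y = make_classification(n_samples=1000, weights=[0.9, 0.1], random_state=0)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Both parts keep approximately the same share of positives.
print(round(y_train.mean(), 3), round(y_test.mean(), 3))
```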

Sir, for skf you missed a line at line number 86: skf = StratifiedKFold()

excellent work

Can you provide a link to the source code?

Hello Sir, stratified k-fold works only for categorical (binary or multiclass) target variables. What if the target variable is continuous? Is binning the target variable the solution?

Thanks
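
Binning is one workaround that is sometimes used, sketched here as an assumption rather than an official recipe: cut the continuous target into quantile bins, stratify on the bin labels, and still train on the original continuous values.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.model_selection import StratifiedKFold

# Synthetic regression data as a stand-in.
X, y = make_regression(n_samples=200, n_features=5, noise=10, random_state=0)

# Five quantile bins; the labels are used only to build the folds.
edges = np.quantile(y, [0.2, 0.4, 0.6, 0.8])
y_binned = np.digitize(y, edges)

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

fold_means = []
for train_idx, test_idx in skf.split(X, y_binned):
    # Fit and score with the continuous y[train_idx] / y[test_idx] here.
    fold_means.append(y[test_idx].mean())

print(np.round(fold_means, 2))
```

Each test fold then covers the full range of the target instead of, by chance, mostly low or mostly high values.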

Just awesome!!!!

But would it be possible for you to also share the Git repository for the code?

Hello Sir, thanks for the wonderful explanation. However, I have a naive question: how are RandomizedSearchCV and GridSearchCV different from K-Fold and Stratified K-Fold?
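
In short, K-Fold and Stratified K-Fold decide how the data is split, while GridSearchCV and RandomizedSearchCV decide which hyperparameters to try and use a splitter internally to score each candidate. A sketch with a placeholder model and a small, arbitrary grid:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, StratifiedKFold

# Placeholder data.
X, y = make_classification(n_samples=500, random_state=0)

# The splitter: how each candidate is evaluated.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# The search: which candidates are evaluated.
search = GridSearchCV(
    LogisticRegression(max_iter=1000),
    param_grid={"C": [0.1, 1, 10]},
    cv=cv,
)
search.fit(X, y)

print(search.best_params_, search.best_score_)
```

`RandomizedSearchCV` takes the same `cv` argument; it samples a fixed number of parameter combinations instead of trying every one.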
