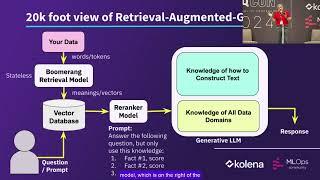

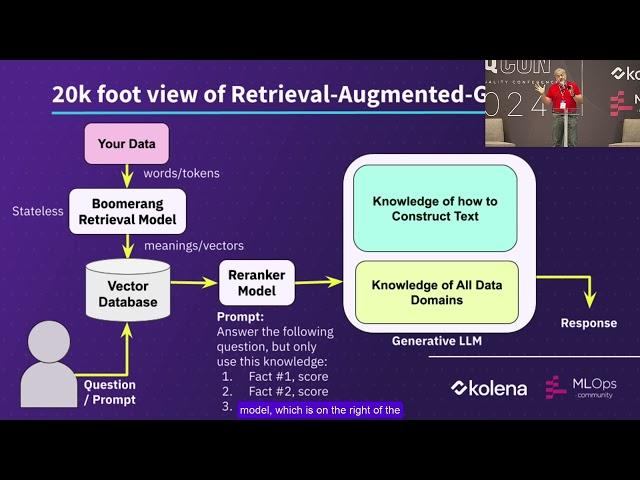

Dr. Amr Awadallah at The AI Quality Conference 2024

Комментарии:

Root any xiaomi with Twrp 2024 | 2 Methods

neifredomar

Bank Account Ka Balance Kaise Check Kare 2024 । How To Check Bank Balance In Mobile 2024

Saurav Online education

Judge Boyd Puts Hesitant Women in Her Place

Judge boyd TV

2025年の大予言。最強予言者が語る1年がヤバすぎる…【 都市伝説 予言 】

コヤッキースタジオ

Теперь Белю Деревья Только Так!

Дом, Сад, Огород PROGRESS WAY

Covered Calls -Tesla Pays Me 4% a month in income - RESULTS

Passive Income, Covered Calls & Stocks

DD Free Dish 2 Watch 118 New TV Channels on DD Free Dish New Satelite SCAN 12 November Kazsat3 58 E

SR Channel - Sahil Free Dish TV

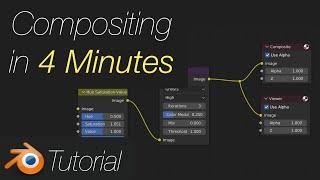

Blender Beginnner Tutorial: Compositing in 4 minutes

Olav3D Tutorials

McDonald's Big Mac Jingle Commercial (1974)

Bionic Disco