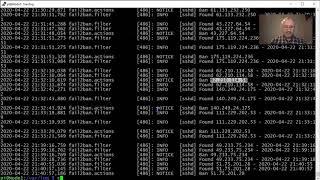

Filtering .log files, with cat, grep, cut, sort, and uniq

Comments:

I was searching for command combinations for reading logs and extracting info. This video is great.

Awesome video! Please don’t stop making Linux, bash, ethical hacking related videos. Thank you. Subscribed!! 😊

One word.……….…………$Π¶€®

Thank you so much for this tutorial, it helped me a lot with understanding cat, grep and sort.

Are you able to tell me what this command would do: "cat -rf ~/syslog | sort | grep -iaE -A 5 'cpu[1-7].*(7[0-9]|8[0-9]|100)' | tee cpu.txt"? Specifically the numbers after cpu, which look to me like a timestamp.

Thank you, this is very helpful.

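A rough sketch of what the grep part of that command matches, using made-up sample lines (a real syslog will look different). The pattern does not care what the numbers mean: `cpu[1-7]` matches cpu1 through cpu7, and `(7[0-9]|8[0-9]|100)` matches 70 to 89 or 100 anywhere later on the line, so it looks more like a usage threshold than a timestamp. As far as I know, cat has no `-r` or `-f` flags, so this sketch reads the file with grep directly and leaves out `-a` (treat binary as text), `-A 5` (print 5 lines after each match) and `tee` (also save the output to cpu.txt).

```bash
# Hypothetical sample lines, invented for illustration.
sample=$(mktemp)
cat > "$sample" <<'EOF'
cpu1 usage 75
cpu2 usage 42
cpu3 usage 100
cpu8 usage 88
CPU5 usage 91
cpu7 usage 89
EOF

# -i ignores case, -E enables extended regex (needed for the | alternation).
# cpu8 fails the [1-7] range; 42 and 91 fail the (7[0-9]|8[0-9]|100) group.
matches=$(grep -iE 'cpu[1-7].*(7[0-9]|8[0-9]|100)' "$sample")
echo "$matches"
rm -f "$sample"
```

Note that the number group is not anchored, so a value such as 170 or 8080 would also match.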

Knowing it's the 16th field from experience, I'm still blown away.

You sir are incredible at teaching

Awesome tutorial on cat and grep, Thanks...

Great info and an enjoyable watch 👍👏

Hi Sir, I have a log file which I cannot see after running the command cd /var/log. Please give me some suggestions, thank you.

Gold sir 🔥

Good

From description: > "I show you how to filter information from a .log file, and you find out just how important strong passwords really are."

I always wondered whether pattern matching has something to do with password security, but then I thought: you have to have the passwords to apply pattern matching to them, right? Because the password input field of a site doesn't accept regex, and generating exhaustive strings from a regex doesn't help either...

So, what scenario are we imagining when talking about regex in the context of secure passwords?

Simple and straightforward ❤

Concept explained well in a short video.

From the IP address, can you find out their location?

Thanks! That was informative. The only thing I would have done differently is flip the order of uniq -d and sort. Fewer items to sort after uniq filters them out.

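One caveat about swapping the order: uniq only compares adjacent lines, so running it before sort can miss repeats that are not next to each other. A small sketch with a made-up IP list (the addresses are hypothetical):

```bash
# Hypothetical IP list where no two equal lines are adjacent.
ips=$(printf '10.0.0.1\n10.0.0.2\n10.0.0.1\n10.0.0.3\n10.0.0.2\n')

# uniq -d first: it sees no adjacent duplicates, so it reports nothing.
unsorted=$(printf '%s\n' "$ips" | uniq -d | sort)

# sort first: equal lines become adjacent and uniq -d reports each repeat once.
sorted=$(printf '%s\n' "$ips" | sort | uniq -d)

echo "uniq -d | sort: '$unsorted'"
echo "sort | uniq -d: '$sorted'"
```

So the swap is only safe when the input already has its duplicates grouped together.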

I am checking this video 3 years after upload. The video tutorial is on point and clear.

Great introduction to the topic. A few things that I think are worth mentioning once people have learned the commands that were demonstrated:

If the logs you're using have a variable number of spaces between columns (to make things look nice), that can mess up using cut. To get around that you can use `sed 's/  */ /g'` (two spaces before the `*`) to replace any run of spaces with a single space. You can also use awk to replace the sed/cut combo, but that's a whole different topic.

uniq also has the extremely useful -c flag which will add a count of how many instances of each item there were.

And as an aside, if people want to cut down on the number of commands used, you can do things like `grep expression filepath` or `sort -u` (on a new enough system), but in the context of this video it is probably better that people learn about the existence of the standalone utilities, which can be more versatile.

Once you're confident in using the tools mentioned in the video, but you still find that you need more granularity than the grep/grep -v combo, you can use pattern metacharacters, which represent concepts like "the start of a line" (^) or "anything" (.*). For example, `grep "^Hello.*World"` matches any line that starts with Hello and at some point later also contains World, with anything or nothing in between. Note that grep patterns are regular expressions rather than shell globbing, so a bare * means "zero or more of the previous character". If that still isn't enough you might want to look into extended regular expressions with grep -E, but they can be even harder to wrap your mind around if you've never used them before. (If you don't understand what anchors or regular expressions really are just from reading this, that's fine. I'm just trying to give you the right terms to Google, because once you know something's name it becomes far easier to find resources on it.)

Now dump all the unique IPs into a text file, and run nslookup on each one. $50 says they are all located in China or Russia. At least 98-99% of them. At least that's what I always end up finding.

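On the variable-spacing point above, here is a minimal sketch of why cut trips over padded columns and two ways to squeeze the padding first. The log line is made up for illustration, and `tr -s` is an alternative to the sed form, not something shown in the video.

```bash
# Hypothetical log line with padded columns.
line='Jan  5   sshd    Failed'

# Plain cut treats every single space as a delimiter, so field 2 is empty here.
raw=$(printf '%s\n' "$line" | cut -d ' ' -f 2)

# tr -s ' ' squeezes each run of spaces down to one, so the fields line up again.
squeezed=$(printf '%s\n' "$line" | tr -s ' ' | cut -d ' ' -f 2)

# The sed form needs two spaces before the *: "a space, then zero or more spaces".
with_sed=$(printf '%s\n' "$line" | sed 's/  */ /g' | cut -d ' ' -f 2)

echo "raw='$raw' squeezed='$squeezed' sed='$with_sed'"
```

This may also be why `cut -d " " -f x` appears not to work on some logs: the field you want sits at a different index than it looks.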

😊 great videos 👍 thank you!!!

You don't need cat, just use grep "string" auth.log. Also, instead of cut, just use awk '{print $11}'.

Thanks.. very helpful and will be using this as a reference from now on

Nice, except cut -d " " -f x is not working for me. I will dig further to figure out why.

Very good video, thanks for sharing, greetings from Mexico.

thanks for the amazing video

love it <3

Thank you, this video was exactly what I needed.

Good vid, thank you

To filter .log files using cat, grep, cut, sort, and uniq commands, follow these steps:

1. First, open your terminal or command prompt.

2. Navigate to the directory containing the .log files you want to filter. You can use the 'cd' command followed by the directory path. For example:

```bash
cd /path/to/your/log/files
```

3. Use the 'cat' command to concatenate and display the contents of a .log file. For instance:

```bash
cat your_log_file.log
```

4. To search for specific lines in the .log file, use the 'grep' command. For example, if you want to find all lines containing the word 'error', you can use:

```bash
grep 'error' your_log_file.log
```

5. If you want to extract specific columns from the output, use the 'cut' command. The format is 'cut -d delimiter -f fields'. cut reads from a file argument or, inside a pipeline, from standard input. For example, if your log file has columns separated by a single space and you want to extract the first column, use:

```bash
cut -d ' ' -f1 your_log_file.log
```

6. To sort the lines alphabetically or numerically, use the 'sort' command. For example:

```bash
sort your_log_file.log
```

7. Finally, to remove duplicate lines, use the 'uniq' command. uniq only collapses duplicates that are adjacent, so feed it sorted input. For example:

```bash
sort your_log_file.log | uniq
```

By combining these commands, you can create a pipeline to filter .log files effectively. For instance:

```bash
cat your_log_file.log | grep 'error' | cut -d ' ' -f1 | sort | uniq
```

This command will display unique first columns from lines containing the word 'error' in your_log_file.log.
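
To try the combined pipeline without touching a real log, here is a self-contained sketch. The file contents are invented, and the first column stands in for whatever field you actually need.

```bash
# Hypothetical log file; the first column plays the role of a source IP.
log=$(mktemp)
cat > "$log" <<'EOF'
10.0.0.2 error disk full
10.0.0.1 info started
10.0.0.2 error disk full
10.0.0.3 error timeout
10.0.0.1 error timeout
EOF

# grep can read the file itself, so the leading cat is optional.
result=$(grep 'error' "$log" | cut -d ' ' -f 1 | sort | uniq)
echo "$result"
rm -f "$log"
```

Each stage narrows the data: grep keeps the matching lines, cut keeps one column, and sort groups equal values so uniq can drop the repeats.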

How can I see all files on a hard drive or USB? And how could decrypted files be erased or overwritten with sudo shred?

genius

Thank you!

Sir, can we use awk instead of cut?

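A small sketch of the difference between the two, assuming an invented auth.log-style line. The field numbers are specific to this sample and will differ in a real log.

```bash
# Hypothetical log line; note the double space after "Jan".
line='Jan  5 10:00:01 host sshd[42]: Failed password for root'

# cut counts every single space, so the padding shifts the field numbers.
cut_field=$(printf '%s\n' "$line" | cut -d ' ' -f 5)

# awk splits on runs of whitespace by default, so padding does not matter.
awk_field=$(printf '%s\n' "$line" | awk '{print $5}')

echo "cut -f 5: $cut_field"
echo "awk \$5:  $awk_field"
```

Here cut returns `host` while awk returns `sshd[42]:`, because the empty field created by the double space counts for cut but not for awk.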

For compressed files, use zcat and zgrep.

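A minimal sketch of that idea, assuming the GNU gzip tools (gzip, zcat, zgrep) are installed. The file name and contents are made up, standing in for a rotated log such as auth.log.1.gz.

```bash
# Build a small gzip-compressed log in a throwaway directory.
dir=$(mktemp -d)
printf 'ok login\nFailed password\nok logout\n' > "$dir/auth.log"
gzip "$dir/auth.log"   # replaces auth.log with auth.log.gz

# zgrep searches the compressed file directly; -c is passed through to grep.
hits=$(zgrep -c 'Failed' "$dir/auth.log.gz")

# zcat decompresses to stdout, so it can feed the usual cut/sort/uniq pipeline.
first=$(zcat "$dir/auth.log.gz" | head -n 1)

echo "hits=$hits first='$first'"
rm -rf "$dir"
```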

Thx. Very helpful.

You could configure fail2ban not only for sshd but also for nginx requests to catch 400-404 errors.

This is one of the most helpful tutorials out there showing how powerful grep and pipes are. Thanks for sharing that and I hope you make more cool stuff.

awesome video

How can we do this without changing directory?

this is exactly what I was looking for and even more! thank you so much!

REALLY HELPED THANK YOU SO MUCH

If I wanted to count the number of times that each unique instance showed up, what would I do? Would I run uniq and then do a word count for each instance by using grep for that specific phrase?

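One common way to get per-value counts without a separate grep for each value is `uniq -c` on sorted input. A sketch with a made-up IP list (the trailing awk only normalizes the padding that uniq -c adds, so the output is easier to read):

```bash
# Hypothetical list of extracted IPs, one per line.
ips=$(printf '10.0.0.2\n10.0.0.1\n10.0.0.2\n10.0.0.3\n10.0.0.2\n10.0.0.1\n')

# sort groups equal lines, uniq -c prefixes each group with its count,
# and sort -rn puts the most frequent value first.
top=$(printf '%s\n' "$ips" | sort | uniq -c | sort -rn | awk '{print $1, $2}')
echo "$top"
```

The first column of the output is the count and the second is the value it belongs to.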

Hi, do you know how to copy a log file from the machine the Cowrie honeypot is on?

I’m on Windows and I’m currently tasked with finding stuff in a log file they gave me.

Thanks for this one. Helped a lot

One of the best video walkthroughs of all time.

Thank you for making learning for my university studies much easier!

If you try to access his server, you will have your public IP posted on the internet.
