How do software projects achieve zero downtime database migrations?

Comments:

The problem with this is that users who are logged in to the SaaS and doing their stuff are using the old API. If you update the system, you expect that every user is using the new API. What are you going to do with the users who are still logged in to the "old" UI that uses the old API? The old API needs to keep working.

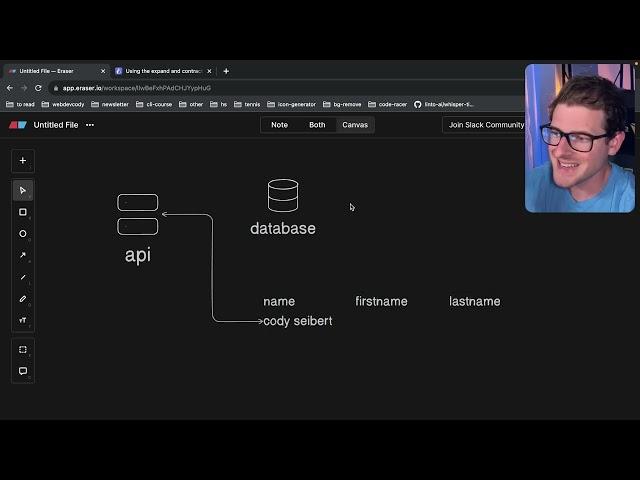

Hello. For example, if I have a worker process that updates the "first name" and "last name" columns from the original "name" column, it may take a write lock on that row. What if a user is changing that row at the same time? What would happen in this case?
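
On the locking question: in most row-locking databases, two writers to the same row are serialized, so the second one waits until the first transaction commits. A minimal sketch of a backfill worker that keeps that wait short follows. It assumes a hypothetical `users` table with `name`, `first_name`, and `last_name` columns, and it uses SQLite only so the example is self-contained. The worker processes small batches in short transactions and guards each update, so a row the user has already edited is left alone.

```python
import sqlite3

def split_name(full_name):
    """Split on the first space; the remainder is treated as the last name."""
    first, _, last = full_name.partition(" ")
    return first, last

def backfill_batch(conn, batch_size=100):
    """Backfill up to batch_size un-migrated rows in one short transaction.

    Returns the number of rows examined, so 0 means nothing is left to migrate.
    """
    rows = conn.execute(
        "SELECT id, name FROM users WHERE first_name IS NULL LIMIT ?",
        (batch_size,),
    ).fetchall()
    with conn:  # commit on success, roll back on error
        for user_id, name in rows:
            first, last = split_name(name)
            # Guard: skip the row if someone filled the new columns or changed
            # `name` after we read it. A row whose `name` changed is still
            # un-migrated, so a later batch picks it up again.
            conn.execute(
                "UPDATE users SET first_name = ?, last_name = ? "
                "WHERE id = ? AND first_name IS NULL AND name = ?",
                (first, last, user_id, name),
            )
    return len(rows)

# Demo with hypothetical data (note: SQLite locks per database, not per row).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL, "
    "first_name TEXT, last_name TEXT)"
)
conn.executemany(
    "INSERT INTO users (name) VALUES (?)",
    [("Ada Lovelace",), ("Grace Hopper",), ("Cher",)],
)
# A user edits row 2 through the new code path before the worker reaches it.
conn.execute(
    "UPDATE users SET name = 'Grace Murray Hopper', first_name = 'Grace', "
    "last_name = 'Murray Hopper' WHERE id = 2"
)
conn.commit()

while backfill_batch(conn, batch_size=1):
    pass
```

With small batches, a user's write to a row in the current batch waits only for that batch to commit rather than for the whole backfill.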

Can you explain database migration in detail? For example, what happens to the data in the earlier database while users are making requests: do we replicate all the data from the earlier database to the new database and then divert user requests?

Thanks for this great video

That's like the natural thing to do. That is how I handle migrations. Great content!

Thanks, Cody. You are a genius! I am curious to know how I could migrate a production WordPress database to another without downtime. Your insights would be much appreciated as always.

At our company, we use a more sophisticated tool called gh-ost from GitHub. It's pretty awesome and lets us run migrations without worrying about the expand/contract method you mentioned. I believe FB has a similar lib with the same process. It creates a shadow copy of the table, still empty, with the migration applied. It then slowly copies rows over and uses the binary log stream to keep track of ongoing changes and apply them. You can also throttle it, run the migration in noop mode, and a lot more.
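
The copy-and-replay idea behind such tools can be illustrated with a toy sketch. This is not gh-ost's code or API: the table names, the `changes` list standing in for the binary log stream, and the helper functions are all hypothetical, and SQLite is used only to keep the example self-contained.

```python
import sqlite3

def split_name(full_name):
    """Split on the first space; the remainder is treated as the last name."""
    first, _, last = full_name.partition(" ")
    return first, last

def copy_chunk(conn, after_id, size):
    """Copy one chunk of rows into the shadow table; return the last id copied."""
    rows = conn.execute(
        "SELECT id, name FROM users WHERE id > ? ORDER BY id LIMIT ?",
        (after_id, size),
    ).fetchall()
    with conn:
        for user_id, name in rows:
            # OR IGNORE: never overwrite a row that a replayed change wrote.
            conn.execute(
                "INSERT OR IGNORE INTO users_shadow (id, first_name, last_name) "
                "VALUES (?, ?, ?)",
                (user_id, *split_name(name)),
            )
    return rows[-1][0] if rows else None

def replay(conn, changes):
    """Apply logged writes to the shadow table; they win over copied rows."""
    with conn:
        for op, user_id, name in changes:
            if op == "delete":
                conn.execute("DELETE FROM users_shadow WHERE id = ?", (user_id,))
            else:  # "upsert"
                conn.execute(
                    "INSERT OR REPLACE INTO users_shadow "
                    "(id, first_name, last_name) VALUES (?, ?, ?)",
                    (user_id, *split_name(name)),
                )

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
# The shadow table already has the new schema while it is still empty.
conn.execute(
    "CREATE TABLE users_shadow (id INTEGER PRIMARY KEY, "
    "first_name TEXT, last_name TEXT)"
)
conn.executemany(
    "INSERT INTO users (id, name) VALUES (?, ?)",
    [(1, "Ada Lovelace"), (2, "Grace Hopper"), (3, "Alan Turing")],
)
conn.commit()

last_id = copy_chunk(conn, 0, 2)  # rows 1 and 2 are copied first

# Live traffic arrives mid-copy; each write is also recorded in the log.
changes = []
conn.execute("UPDATE users SET name = 'Ada King' WHERE id = 1")
changes.append(("upsert", 1, "Ada King"))
conn.execute("DELETE FROM users WHERE id = 2")
changes.append(("delete", 2, None))
conn.commit()

while last_id is not None:  # copy the remaining chunks
    last_id = copy_chunk(conn, last_id, 2)
replay(conn, changes)

# Cut-over: swap the tables by renaming.
conn.execute("ALTER TABLE users RENAME TO users_old")
conn.execute("ALTER TABLE users_shadow RENAME TO users")
```

A real tool interleaves copying and replaying continuously and makes the final swap atomic; the sketch runs them one after the other only to keep the flow readable.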

We use a middleware approach to facilitate "prepping" the data for an upgrade. Normally, a process runs to update the names (we don't use a database script because of excessive locks). In conjunction with this, the middleware can decide to (a) read from the name field and (b) update both sides: if the customer sends an update, it updates the name field but also the first/last name fields. This normally takes longer than a database script but ensures a smoother transition. Once all records are updated, the same middleware can be switched to read from the first/last name fields. This is also true when building new complex indexes: sometimes we have to move the data from field A to field B (the field with the index) and have the middleware select/update the proper target field.

If you use a Node/Express "getCustomer" route, you can do a quick "middleware" between the user request and the actual database.

Always good content from you, Cody! Jah bless!
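
The middleware approach described above can be sketched roughly as follows. It is written in Python rather than Node/Express, and the `CustomerStore` class, the `customers` table, and the `read_new` switch are all hypothetical names chosen for illustration. SQLite is used only so the example is self-contained.

```python
import sqlite3

class CustomerStore:
    """Data-access layer that hides the old/new schema from request handlers."""

    def __init__(self, conn, read_new=False):
        self.conn = conn
        self.read_new = read_new  # flip to True once every row is backfilled

    def update_name(self, customer_id, first, last):
        """Dual write: keep the old `name` column and the new columns in sync."""
        with self.conn:
            self.conn.execute(
                "UPDATE customers SET name = ?, first_name = ?, last_name = ? "
                "WHERE id = ?",
                (f"{first} {last}".strip(), first, last, customer_id),
            )

    def get_name(self, customer_id):
        """Return the display name from whichever side is authoritative."""
        row = self.conn.execute(
            "SELECT name, first_name, last_name FROM customers WHERE id = ?",
            (customer_id,),
        ).fetchone()
        if row is None:
            return None
        name, first, last = row
        if self.read_new and first is not None:
            return f"{first} {last or ''}".strip()
        return name  # old column, or fallback for rows not yet backfilled

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, "
    "first_name TEXT, last_name TEXT)"
)
conn.execute("INSERT INTO customers (id, name) VALUES (1, 'Ada Lovelace')")
conn.commit()

store = CustomerStore(conn)
before = store.get_name(1)            # read from the old column
store.update_name(1, "Ada", "King")   # customer sends an update: both sides change
store.read_new = True                 # later: switch reads to the new columns
after = store.get_name(1)
```

Because every write goes to both sides, the read switch can be flipped (or flipped back) without the user seeing a different name.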

Speaking of databases, what is your experience with connecting relational databases to serverless functions? From what I understand, databases are not meant to handle many connection requests, so they don't scale well. The likes of PlanetScale and Supabase are there to plug this gap, but they can be pricey.

This is normally called a “3-step migration”. The one thing wrong in your video: in the 1st step you write to both the old and the new schema.

I honestly gotta ask our admins how we do it exactly, especially when tables with 10s and 100s of billions of rows are involved. I think it was done on one of the replicas first which then becomes the new master after deployment etc.

I was literally doing some research on this topic today. Thank you so much, we want more content like this!

Ok, so your first name is Cody...

In your example, if writing new data into the two new columns, but reading is still done from the old column, wouldn't the user get stale data? Imagine you update your first name in an app and after reloading the page it still shows the old name, no? The solution to that is to write the new data not only to the new columns, but also the old column, right? In the process you described, I don't think you can skip this step (continue writing to the old column) without having stale data.

Next time, please put the Prisma link in the video description so we don't have to type it out from the video.

Are there scenarios in which creating a fully separate database instance with the modified schema is more effective for migrations? My assumption is that it is easier to handle zero downtime, testing, and rollbacks by just switching the database connection. I suppose this is also more appropriate for complicated schema changes, but it is more expensive.

I once migrated MongoDB to Postgres. I had to export the data from MongoDB and write a separate piece of code that reads the exported data, transforms it to a Postgres data model, and inserts it. But we had downtime. Now I am wondering if this was possible without downtime. OK, it sure was possible. I'm just wondering if it was worth it. I guess it all comes down to whether a potential downtime of X minutes is more expensive for the company than Y more hours invested in backend and DevOps work. Since it was a relatively small business (~90k users), I guess it's not the end of the world if you have downtime. But if you are Netflix, then of course 1 minute of downtime can cost you millions and millions.

Cody, you are like a window to the gated community of production-grade software and service design. Your work is always appreciated.

Why not just update the database incrementally and then swap the API? If the database was updated anyway for less frequent users, then I didn't quite understand the reason for the first part.

Can confirm, this is what we do as well. We usually keep [and keep updating] the old column for 1-2 iterations, in case some unexpected issue shows up. Just make sure to actually schedule deleting it eventually, otherwise it can become very confusing.
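
One way to make that eventual deletion less risky is to check, before scheduling it, that the old and new columns still agree. A minimal sketch, assuming a hypothetical `users` table where `name` was split into `first_name` and `last_name` and the old column is still being dual-written (SQLite is used only to keep it self-contained):

```python
import sqlite3

def find_drift(conn):
    """Return ids where the new columns are missing or disagree with `name`."""
    rows = conn.execute(
        "SELECT id FROM users "
        "WHERE first_name IS NULL OR last_name IS NULL "
        "OR name <> trim(first_name || ' ' || last_name) "
        "ORDER BY id"
    ).fetchall()
    return [row[0] for row in rows]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL, "
    "first_name TEXT, last_name TEXT)"
)
conn.executemany(
    "INSERT INTO users (id, name, first_name, last_name) VALUES (?, ?, ?, ?)",
    [
        (1, "Ada Lovelace", "Ada", "Lovelace"),  # consistent
        (2, "Grace Hopper", "Grace", None),      # backfill incomplete
        (3, "Alan Turing", "Alan", "Turin"),     # a write missed one side
        (4, "Cher", "Cher", ""),                 # single-word name, consistent
    ],
)
conn.commit()

drift = find_drift(conn)  # ids that must be fixed before dropping `name`
```

An empty result over one or two release cycles is a reasonable signal that the old column can be dropped; any ids returned point to a code path that still writes only one side.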

Niiiice babe!!! Good job!
