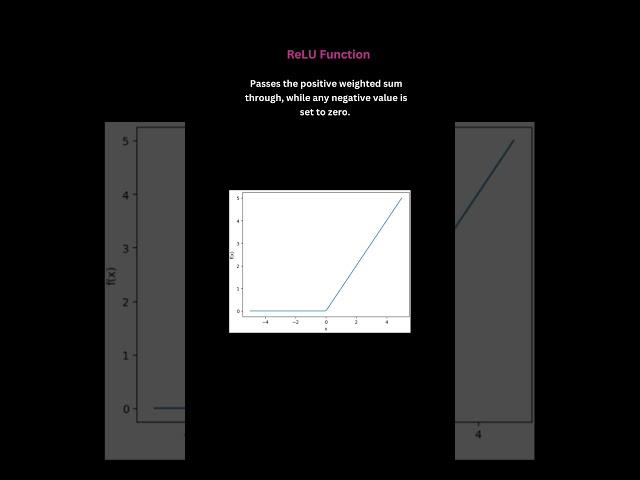

ReLU Activation Function Explained! #neuralnetwork #ml #ai

The rectified linear unit (ReLU) activation function is a non-linear function that is commonly used in artificial neural networks. It is defined as follows:

f(x) = max(0, x)

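In other words, ReLU returns the input unchanged when it is positive and returns 0 otherwise (for zero and negative inputs). Each of its two pieces is linear, but the kink at x = 0 makes the function as a whole non-linear, which is what lets neural networks learn complex patterns that a stack of purely linear layers could not represent.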
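As a rough illustration of the definition above, here is a minimal pure-Python sketch. The helper names `relu` and `relu_list` are made up for this example and do not come from the video or from any particular library; real frameworks ship their own vectorized versions.

```python
def relu(x: float) -> float:
    """Return x when x is positive, otherwise 0.0 (i.e. max(0, x))."""
    return max(0.0, x)


def relu_list(values):
    """Apply relu element-wise to a list of numbers (hypothetical helper)."""
    return [relu(v) for v in values]


if __name__ == "__main__":
    # Negative inputs and zero map to 0.0; positive inputs pass through.
    print(relu_list([-2.0, -0.5, 0.0, 0.5, 2.0]))  # [0.0, 0.0, 0.0, 0.5, 2.0]

    # A linear function would satisfy f(a + b) == f(a) + f(b).
    # ReLU does not, which is one way to see that it is non-linear.
    a, b = -1.0, 2.0
    print(relu(a + b), relu(a) + relu(b))  # 1.0 2.0
```

The second print shows the additivity check failing for a = -1 and b = 2: applying ReLU to the sum gives 1.0, while summing the individual outputs gives 2.0.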

Tags:

#relu_activation_function #relu_in_neural_networks #relu #relu_activation_function_in_neural_networks #ReLU_activation #what_is_relu #rectified_linear_unit #rectified_linear_unit_activation_function

UncomplicatingTech
