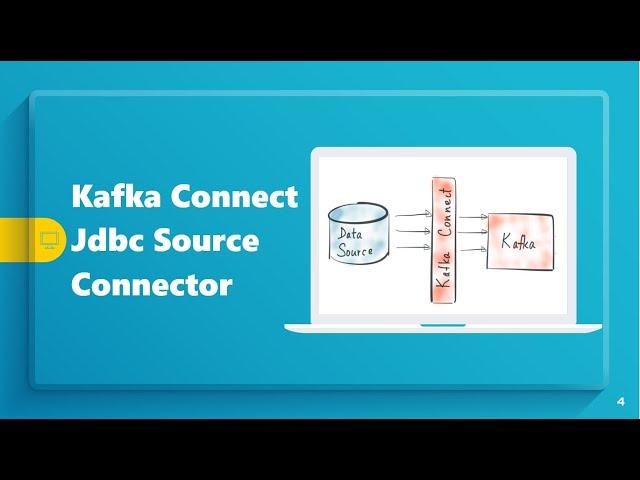

Source MySQL table data to Kafka | Build JDBC Source Connector | Confluent Connector | Kafka Connect

Comments:

Great video! Can you help with connecting to Snowflake as a source?

Sir, nice video, but at least you could upload the code link.

Dear Vishal,

Thank you for your effort & time to produce & upload these tutorials.

Please, what is the best method or connector available to pull or ingest data that normally gets updated or changed, for example bank account statements?

Thanks.

How do I download the Kafka Connect JDBC connector?

Could you please provide the link?

Can you please guide me on how to do this locally instead of on Confluent Cloud?

What I want to do is connect a database with Kafka Connect so that the data gets inserted into the Kafka cluster.

From Oracle, it is unable to fetch data. Is there a license issue?

My Confluent installation doesn't have the JDBC source connector by default. Please help me install it.

Thanks for the video, Vishal. I wanted to know: we can also source from an Oracle DB using the JDBC Source Connector, can't we? And where did you deploy the connector config file? In Confluent Cloud?

I'm a beginner with Kafka and DB concepts. What exactly is a dialect in this case?

Could anyone paste the config properties in a comment?

Sir, can you provide the JDBC driver link?

wtf, you are good

Hi Vishal,

I am facing the issue "Invalid connector configuration: There are 2 fields that require your attention" while connecting to a MySQL DB. Is MySQL JDBC configuration required as well? If yes, how can we do that?

Hi Vishal, thank you very much for this! :)

I have one question: how did you install the connector plugin for the sink?

Could you please do a video on loading each table into its own topic, using SQL Server as a source, so that every time a table undergoes a DML action the new event is sent to the Confluent Platform?

Hi,

Using your code, the connector got connected, but its status went to failed and no data is fetched from the DB. Can I know what the reason was?

Hi Vishal,

Could you please help me with a Snowflake source connector (via JDBC) to unload the data to Confluent Kafka?

If there's any sample code, please share the GitHub details with me.

Hi Vishal, when I tried to upload the connector I got the error "invalid connector class". I need advice. Thanks.

Hi Vishal, could you please help me push Confluent topic data into a ScyllaDB table?

Nice video bro. It worked for me. One quick question for you:

How do I run a query like this:

select id,CUST_LN_NBR FROM flex_activity limit 1;

In this query I am using the LIMIT option, and because of that it fails. If I use a simple query without LIMIT, it works fine.

The error I am getting:

org.apache.kafka.connect.errors.ConnectException: java.sql.SQLSyntaxErrorException: You have an error in your SQL syntax; check the manual that corresponds to your MySQL server version for the right syntax to use near 'WHERE `ID` > -1 ORDER BY `ID` ASC' at line 1

This happens not only with the LIMIT option but whenever I have to apply any filter.
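
The failure above is consistent with how the Confluent JDBC source connector treats a custom `query` in incrementing mode: it appends its own `WHERE ... ORDER BY ...` clause (the fragment quoted in the error), so a query that already ends in `LIMIT` or already has a `WHERE` turns into invalid SQL. One commonly suggested workaround is to wrap the custom query in a derived table so the appended clause applies to an outer `SELECT`. The Python sketch below only builds strings to illustrate this; `wrap_custom_query`, `appended_incrementing_sql`, the `src` alias, and the connection URL are hypothetical, and `appended_incrementing_sql` merely approximates the clause shown in the error message rather than reproducing the connector's own code.

```python
import json


def wrap_custom_query(query: str, alias: str = "src") -> str:
    """Wrap a custom query in a derived table so an appended WHERE clause stays valid."""
    # Drop a trailing semicolon, which would terminate the statement early.
    inner = query.strip().rstrip(";").strip()
    # MySQL requires an alias on every derived table.
    return f"SELECT * FROM ({inner}) AS {alias}"


def appended_incrementing_sql(query: str, column: str, last_value: int) -> str:
    """Approximate the clause shown in the error message (illustration only)."""
    return f"{query} WHERE `{column}` > {last_value} ORDER BY `{column}` ASC"


original = "select id,CUST_LN_NBR FROM flex_activity limit 1"
wrapped = wrap_custom_query(original)

# Invalid: the WHERE lands after LIMIT, matching the SQLSyntaxErrorException above.
print(appended_incrementing_sql(original, "ID", -1))
# Valid MySQL: the outer SELECT receives the appended WHERE ... ORDER BY.
print(appended_incrementing_sql(wrapped, "ID", -1))

config = {
    "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
    "connection.url": "jdbc:mysql://localhost:3306/demo",  # hypothetical database
    "mode": "incrementing",
    "incrementing.column.name": "ID",
    "query": wrapped,
    # With a custom query, topic.prefix is used as the full topic name.
    "topic.prefix": "flex_activity",
}
print(json.dumps(config, indent=2))
```

With `LIMIT 1` inside the derived table, the inner query returns at most one row per poll, so once that row's ID has been recorded nothing new is emitted; the wrapper is more useful for `WHERE` filters and column selections.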

Very helpful video. I see that 'timestamp.column.name' should have been TXN_DATE (as flashed up in the video); otherwise updates won't work. It would have been nice to see this working at the end, in addition to adding new records.
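
The point about `timestamp.column.name` can be sketched as a connector config. This assumes a self-managed Kafka Connect worker running the Confluent JDBC source connector, a hypothetical table `transactions` with a strictly increasing `ID` column, and that `TXN_DATE` is updated whenever a row changes. In `timestamp+incrementing` mode the timestamp column is what detects new and modified rows, while the incrementing column gives each row a unique offset. The helper `jdbc_source_config`, the connection URL, topic prefix, and connector name are placeholders; the fully managed Confluent Cloud connectors may use different property names.

```python
import json
from typing import Optional

# Which modes need which column, following the connector's `mode` setting.
TIMESTAMP_MODES = {"timestamp", "timestamp+incrementing"}
INCREMENTING_MODES = {"incrementing", "timestamp+incrementing"}


def jdbc_source_config(table: str, mode: str,
                       id_col: Optional[str] = None,
                       ts_col: Optional[str] = None) -> dict:
    """Build a JDBC source connector config dict with placeholder connection values."""
    config = {
        "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
        "connection.url": "jdbc:mysql://localhost:3306/demo",  # hypothetical database
        "table.whitelist": table,
        "mode": mode,
        "topic.prefix": "mysql-",  # topic becomes mysql-<table>
    }
    if mode in TIMESTAMP_MODES:
        if not ts_col:
            raise ValueError(f"mode '{mode}' needs a timestamp column")
        # Updated rows are only re-read if this column changes on every UPDATE.
        config["timestamp.column.name"] = ts_col
    if mode in INCREMENTING_MODES:
        if not id_col:
            raise ValueError(f"mode '{mode}' needs an incrementing column")
        config["incrementing.column.name"] = id_col
    return config


config = jdbc_source_config("transactions", "timestamp+incrementing",
                            id_col="ID", ts_col="TXN_DATE")
# Request body for POST /connectors on the Kafka Connect REST API
# (the connector name is hypothetical).
payload = {"name": "mysql-transactions-source", "config": config}
print(json.dumps(payload, indent=2))
```

Raising an error when the timestamp column is missing mirrors the mistake described in the comment: without it, the connector has no way to notice that an existing row was updated.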

Hey, I tried to connect to PostgreSQL and finally ended up with the error below in the logs:

Caused by: org.apache.kafka.common.errors.SerializationException: Error serializing Avro message

Caused by: org.apache.kafka.connect.errors.DataException: Failed to serialize Avro data from topic <topic_name>

Any help?

Hi Vishal, thanks for sharing these videos. Please let me know where I can check the errors/logs when connectors fail, and also the logs/errors if there are any issues processing data. Please share the paths.

Thanks, I need your email, please.

Can you give us the link for the config file?

AWESOME!! Can you also upload a video on a sink connector to an Oracle database or any RDBMS?

Really cool bro!

Thanks for the great tutorial. Did you have to create the Avro schema, or does it get generated?

Most sensible video on Kafka topic, thank you very much.