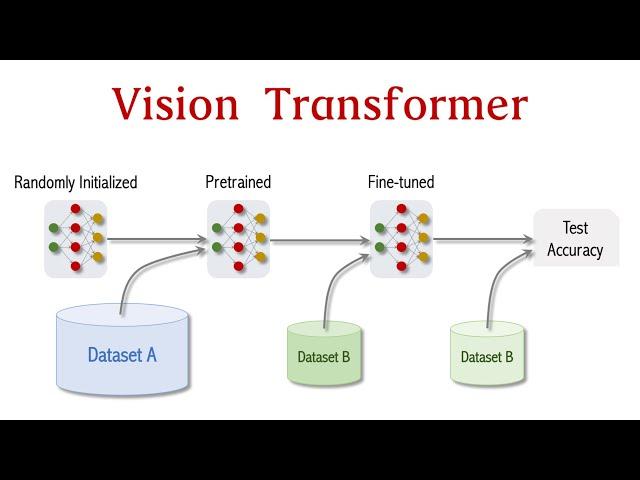

Vision Transformer for Image Classification

Comments:

Excellent explanation 👌

You have explained ViT in simple words. Thanks

Nice video! Just a question: what is the argument for getting rid of the vectors c1 to cn and keeping only c0? Thanks

This is a weird question, but what if we made the transformer try to learn the positional encoding by itself? We give it everything but the positional encoding and tell it to find the positional encoding. What would it learn, and how would that end up helping us? I don't know if this thought will go anywhere, but I wanted to throw it out here.

Nicely explained. Appreciate your efforts.

Thank you for the clear explanation!!☺

Thank you. Best ViT video I found.

In the job market, do data scientists use transformers?

amazing precise explanation

Good video, what a splendid presentation. Wang Shusen, the GOAT.

👏

Thank you for your Attention Models playlist. Well explained.

Man, you made my day! These lectures were golden. I hope you continue to make more of these

great

These are some of the best, hands-on and simple explanations I've seen in a while on a new CS method. Straight to the point with no superfluous details, and at a pace that let me consider and visualize each step in my mind without having to constantly pause or rewind the video. Thanks a lot for your amazing work! :)

I can't stress enough how easy to understand you made it.

Awesome Explanation.

Thank you

This is a great explanation video.

One nit: you are misusing the term 'dimension'. If a classification vector is linear with 8 values, that's not '8-dimensional'; it is a 1-dimensional vector with 8 values.

Brilliant. Thanks a million

WHY does the transformer need so many images to train?? And why doesn't ResNet get better with more training the way ViT does?

If we ignore the outputs c1 ... cn, what do c1 ... cn represent, then?

Very good explanation

subscribed!

This English is really something else.

Actually, I think the uploader would do better speaking Chinese 🥰🤣

15 minutes of heaven 🌿. Thanks a lot, understood it clearly!

Thank you so much for this amazing presentation. You have a very clear explanation, I have learnt so much. I will definitely watch your Attention models playlist.

really great explanation, thank you

great video!

Not All Heroes Wear Capes <3

Brilliant explanation, thank you.

Amazing, I am in a rush to implement a vision transformer for an assignment, and this saved me so much time!

Great explanation

Great video, thanks. Could you please explain the Swin Transformer too?

Very clear, thanks for your work.

The simplest and most interesting explanation, many thanks. I'm asking about object detection models: have you explained them before?

Wonderful talk

Good job! Thanks

Really good, thx.

Very nice job, Shusen, thanks!

Great great great

This was a great video. Thanks for your time producing great content.

Great explanation :)

The concept has similarities to TCP protocol in terms of segmentation and positional encoding. 😅😅😅

This reminds me of Encarta encyclopedia clips when I was a kid lol! Good job mate!

If you remove the positional encoding step, the whole thing is almost equivalent to a CNN, right?

I mean, those dense layers act just like the filters of a CNN.
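The narrower part of this comment can be checked directly: applying one dense layer to every non-overlapping p × p patch produces the same numbers as a convolution whose kernel size and stride both equal p. The plain-Python sketch below assumes a single-channel image whose height and width are divisible by p; the helper names `extract_patches`, `dense` and `conv2d_strided` are hypothetical and do not come from any library. It covers only the patch-embedding layer, not the self-attention layers that follow it.

```python
import random

def extract_patches(img, p):
    """Split an H x W single-channel image (list of lists) into non-overlapping
    p x p patches, each flattened row-major into a vector of length p*p.
    Assumes H and W are divisible by p."""
    h, w = len(img), len(img[0])
    return [
        [img[i + di][j + dj] for di in range(p) for dj in range(p)]
        for i in range(0, h, p)
        for j in range(0, w, p)
    ]

def dense(x, weights, bias):
    """Dense layer: y[k] = sum_m weights[k][m] * x[m] + bias[k]."""
    return [sum(wk[m] * x[m] for m in range(len(x))) + bk for wk, bk in zip(weights, bias)]

def conv2d_strided(img, filters, bias, p):
    """'Valid' cross-correlation (what deep-learning libraries call convolution)
    with kernel size p and stride p. Each filter is a p x p grid; returns one
    output vector (one value per filter) per position, in row-major order."""
    h, w = len(img), len(img[0])
    return [
        [
            sum(f[di][dj] * img[i + di][j + dj] for di in range(p) for dj in range(p)) + b
            for f, b in zip(filters, bias)
        ]
        for i in range(0, h - p + 1, p)
        for j in range(0, w - p + 1, p)
    ]

random.seed(0)
H = W = 4   # image size (illustrative)
P = 2       # patch size = kernel size = stride
D = 3       # embedding dimension = number of filters (illustrative)
img = [[random.random() for _ in range(W)] for _ in range(H)]
filters = [[[random.random() for _ in range(P)] for _ in range(P)] for _ in range(D)]
bias = [random.random() for _ in range(D)]
# The dense weight matrix is just each filter flattened row-major.
weights = [[f[di][dj] for di in range(P) for dj in range(P)] for f in filters]

via_dense = [dense(x, weights, bias) for x in extract_patches(img, P)]
via_conv = conv2d_strided(img, filters, bias, P)
print(via_dense == via_conv)  # True
```

Because both paths multiply the same pairs of numbers in the same order, the results match exactly, not just approximately. This is why many ViT implementations write the patch embedding as a strided convolution.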

Thank you, your video is way underrated. Keep it up!

Amazing video. It helped me really understand vision transformers. Thanks a lot. But I have a question: why do we use only the CLS token for the classifier?
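Several comments ask why only c0 goes to the classifier. A minimal sketch under heavy simplifying assumptions (one self-attention layer with identity query/key/value projections, no multi-head, LayerNorm or MLP) shows the mechanics: the CLS token is prepended as x0, every output c_i is a weighted average over all tokens, so c0 already mixes information from every patch, and it is the only output passed to the classifier. In the real model the CLS vector is a learned parameter. Here `cls` is a fixed illustrative vector, and `self_attention` and `vit_head` are hypothetical helper names.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def self_attention(tokens):
    """Simplified single-head self-attention with identity Q/K/V projections:
    output c_i is a softmax(x_i . x_j / sqrt(d))-weighted average of all tokens x_j."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[t] for w, v in zip(weights, tokens)) for t in range(d)])
    return out

def vit_head(patch_embeddings, cls_token, W_cls, b_cls):
    tokens = [cls_token] + patch_embeddings  # sequence [x0 = CLS, x1, ..., xn]
    c = self_attention(tokens)               # outputs [c0, c1, ..., cn]
    c0 = c[0]                                # only c0 is used; c1..cn are discarded
    logits = [sum(w * x for w, x in zip(row, c0)) + b for row, b in zip(W_cls, b_cls)]
    return softmax(logits)                   # class probabilities

patches = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.1, 0.0, 0.2], [0.3, 0.3, 0.3, 0.3]]
cls = [0.0, 0.0, 0.0, 0.0]
W_cls = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]  # 2 illustrative classes
b_cls = [0.0, 0.0]
probs = vit_head(patches, cls, W_cls, b_cls)
print(len(probs), round(sum(probs), 6))  # 2 1.0
```

With a zero CLS vector and identity projections, every attention score for c0 is 0, so c0 is simply the average of all n + 1 tokens. In a trained model, the learned projections let c0 weight the patches unevenly.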